Have you ever wondered how popular search engines like Google, Yahoo, and Bing can search among millions of web pages and provide you with the most relevant articles for your search in a matter of milliseconds?

They achieve this with a bot called a web crawler. It browses the web, collects relevant links, and stores them. This is what powers search engines, and it is also the basis of web scraping. You can code a web crawler on your own; all you need is a basic knowledge of the Python programming language.
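To make the idea concrete, here is a minimal sketch of that loop (fetch a page, collect its links, store them, repeat) using only Python's standard library. The names `LinkCollector`, `fetch_html`, and `crawl` are illustrative choices for this sketch, and a real crawler would also need politeness rules such as respecting robots.txt and rate limits.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_html(url):
    """Download a page and return its HTML as text (needs network access)."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def crawl(start_url, fetch=fetch_html, max_pages=10):
    """Breadth-first crawl from start_url; returns the URLs visited, in order."""
    seen = {start_url}          # every URL ever queued, to avoid repeats
    queue = deque([start_url])  # URLs waiting to be fetched
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except Exception:
            continue  # skip pages that fail to download
        visited.append(url)
        parser = LinkCollector()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute.startswith("http") and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```

The `fetch` parameter is there so the crawl logic can be tried against in-memory pages before pointing it at a live site; `max_pages` keeps the crawl from running indefinitely.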

If you are looking for alternatives that don’t require coding, don’t worry, we have got you covered. This article aims to explore both the coding and non-coding methods of creating a web crawler.

Python Alternative: Create Web Crawler Without Coding

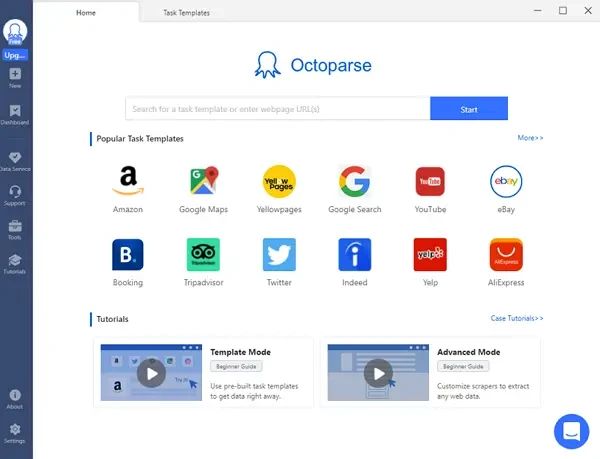

You can build a web crawler with Python, but doing so requires some coding knowledge. If you would rather not code, there are free web crawling tools aimed at beginners that can handle your web scraping for you. The best one is Octoparse.

Octoparse is a user-friendly web scraping tool. It is one of the most widely used tools for extracting bulk data from multiple websites. It supports up to 10,000 links in one go. Some of its most attractive features are listed below:

- It is easy to use even if you know nothing about coding.

- An auto-detection function makes building a crawler much easier.

- Extracted data can be exported to multiple file formats and databases.

- Preset templates for popular websites let you scrape data in a few clicks.

- Scraping tasks can be scheduled at any time – hourly, daily, or weekly.

- The IP rotation mechanism prevents your IP from being blocked.

How to Create A Web Crawler with Python from Scratch

Python provides multiple libraries and frameworks to create a web crawler with ease. The two main methods widely used for web scraping are:

- Web crawler using Python BeautifulSoup library.

- Web crawler using Python Scrapy framework.

Before we get into the coding part, let us discuss some pros and cons of each method.

Pros of Scrapy

- It is a complete web scraping framework rather than a single Python library, so crawling, parsing, and data export are handled in one place.

- It is open source.

- It is generally faster than other methods of web scraping because it sends requests asynchronously.

- Scrapy has a large and active developer community compared to other web scraping tools.

Cons of Scrapy

- It is slightly more complex than other methods of web scraping.

- It requires more project setup and boilerplate, which makes it a poor fit for small-scale projects.

- Its documentation can be hard for beginners to follow.

Pros of BeautifulSoup

- BeautifulSoup is easy to use and beginner friendly.

- Perfect for small projects as it is lightweight and less complex.

- Easily understandable documentation for beginners.

Cons of BeautifulSoup

- It is slower than other methods of web scraping.

- It does not scale well to larger projects.

- It depends on other Python packages: BeautifulSoup only parses HTML, so a separate library is needed to download the pages.

Build a web crawler with Python BeautifulSoup

In this method, we will download statistical data on the effects of the coronavirus from the Worldometers website. An application like this is useful for data mining and for storing data collected through web scraping.

Code for reference:
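The listing below is a minimal sketch of this approach, not a definitive implementation. It assumes the statistics sit in an ordinary HTML table; the page address in `URL`, the helper names `fetch_html` and `parse_table`, and the sample HTML with its made-up values are all assumptions for illustration, so inspect the live page before relying on any of them. Downloading is done with the standard library's `urllib` here; the `requests` package would work equally well.

```python
from urllib.request import Request, urlopen

from bs4 import BeautifulSoup

# Assumed page address; check it in a browser before use.
URL = "https://www.worldometers.info/coronavirus/"


def fetch_html(url):
    """Download a page and return its HTML as text (needs network access)."""
    request = Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urlopen(request, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def parse_table(html, table_id=None):
    """Return the first matching <table> as a list of rows (lists of cell text)."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id=table_id) if table_id else soup.find("table")
    if table is None:
        return []
    rows = []
    for tr in table.find_all("tr"):
        cells = [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
        if cells:
            rows.append(cells)
    return rows


# Offline demonstration on placeholder HTML (made-up values, not real data).
sample = """
<table id="stats">
  <tr><th>Country</th><th>Total Cases</th></tr>
  <tr><td>Exampleland</td><td>1,234</td></tr>
</table>
"""
print(parse_table(sample))
```

To run it against the live site, call `parse_table(fetch_html(URL))`; if the page contains several tables, pass the `id` attribute of the one you want as `table_id`.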

Learn more about Beautiful Soup in web scraping and its alternative.

Make a web crawler using Python Scrapy

In this simple example, we will scrape data from Amazon. Since Scrapy provides an interactive shell of its own, we do not need to create a code file. We can get the desired results by entering simple commands in the Scrapy shell.

1. Setting up Scrapy

- Open a command prompt.

- Run the command:

- pip install scrapy

- Once Scrapy is installed, type the command:

- scrapy shell

- This will start the Scrapy shell within the command prompt.

2. Fetching the website

- Use the fetch command to get the target webpage as a response object.

- fetch('https://www.amazon.in/s?k=headphones&ref=nb_sb_noss')

- The shell logs the result of the request, for example a Crawled (200) line when the page is downloaded successfully.

- Now open the retrieved webpage using the command:

- view(response)

- This will open the webpage in the default browser, and the command returns True.

3. Extracting Data from the website

- Right-click the first product title on the page and select Inspect.

- You will notice that it has several CSS classes associated with it.

- Copy one of them to your clipboard.

- Run the following command in the Scrapy shell, replacing class_name with the class you copied (the leading dot marks it as a CSS class selector):

- response.css('.class_name::text').extract_first()

- The command returns the name of the first product on the page.

- If this is successful, proceed to extract all the product names:

- response.css('.class_name::text').extract()

- The list of product names on the page is displayed in the Scrapy shell.

You can also find more information about Scrapy and its web scraping alternative.

Web scraping is a handy way to collect freely available information from publicly accessible sources. It reduces the manual labor that goes into downloading bulk data. Once mastered, it can prove to be a very useful skill for commercial and professional purposes.

Final Words

There are numerous methods of web scraping, two of which are explained in this article. Each method has its pros and cons and its own level of complexity. The right method for a particular project depends on that project’s requirements, so it may be necessary for a developer to learn more than one. I hope this article reinforces your understanding of web scraping and inspires you in your web scraping journey.