“Can you pull data from websites to Excel?”

You may have asked a similar question when you wanted to download data from a website, since Excel is a common, easy-to-use tool for collecting and analyzing data. With Excel, you can easily sort, filter, and outline data and build charts from it. When the data are highly structured, you can even perform advanced analysis with pivot tables and regression models in Excel.

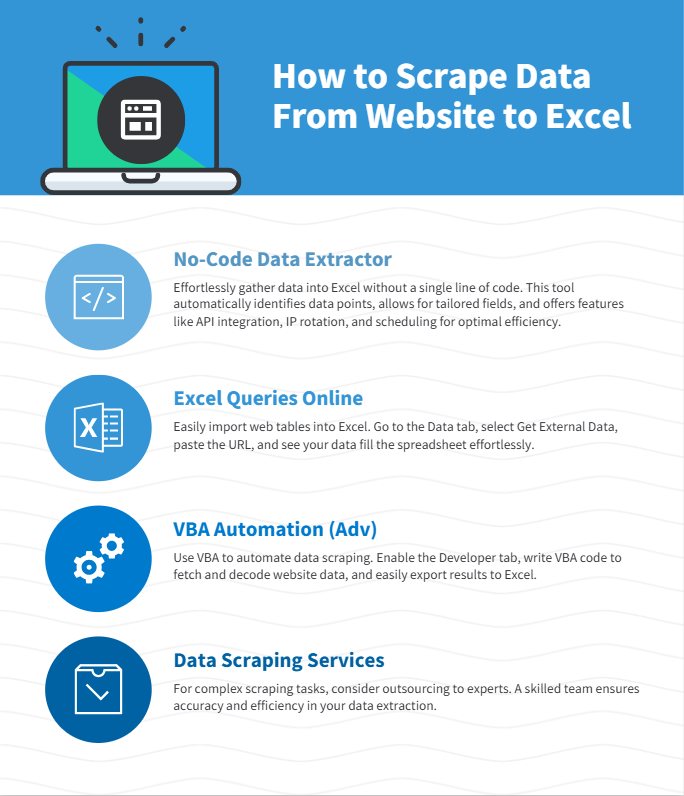

However, collecting data manually by repeatedly typing, searching, copying, and pasting is extremely tedious. To solve this problem, here are 3 different ways to scrape websites to Excel easily and quickly.


Method 1: No-Coding Crawler to Scrape Website to Excel

Web scraping is the most flexible way to get all kinds of data from web pages into Excel files. Many users find it hard because they don't know how to code; however, an easy web scraping tool like Octoparse can help you scrape data from websites to Excel without any coding.

Octoparse provides AI-based auto-detection to extract data automatically; all you need to do is check the results and make a few adjustments. It also offers advanced features such as API access, IP rotation, cloud service, and scheduled scraping to help you get more data.

Turn website data directly into structured Excel, CSV, Google Sheets, or database records.

Scrape data easily with auto-detection; no coding skills required.

Use preset scraping templates for popular websites to get data in a few clicks.

Reduce the risk of getting blocked with IP proxies and advanced API access.

Use the cloud service to schedule data scraping whenever you want.

Here is a video guide on how to extract data from a website to Excel. Alternatively, follow the simple steps in the next sections to scrape website data into Excel without any coding.

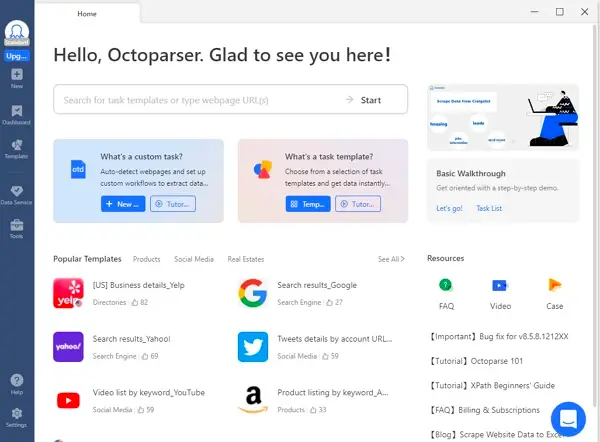

Online data scraping templates

You can also use preset data scraping templates for popular sites such as Amazon, eBay, LinkedIn, and Google Maps to get web page data in a few clicks. Try the online scraping template below without downloading any software to your device.

https://www.octoparse.com/template/contact-details-scraper

3 steps to scrape data from a website to Excel

Step 1: Paste the target website URL to begin auto-detection.

After downloading and installing Octoparse on your device, paste the link of the site you want to scrape, and Octoparse will start auto-detecting the data.

Step 2: Customize the data fields you want to extract.

Octoparse creates a workflow after auto-detection. You can easily change the data fields to suit your needs, following the hints shown in the Tips panel.

Step 3: Download the scraped website data into Excel.

Run the task after you have checked all the data fields. You can then download the scraped data in Excel or CSV format to your local device, or save it to a database.

Custom web scraping service

If time is your most valuable asset and you want to focus on your core business, outsourcing this complicated work to an experienced web scraping team might be the best option. Scraping data from websites can be difficult because many sites use anti-scraping measures, such as bot detection, that restrict automated access. A proficient web scraping team can help you get data from websites properly and deliver it as a structured Excel sheet or in any other format you need.

Here are some customer stories showing how the Octoparse web scraping service helps businesses of all sizes.

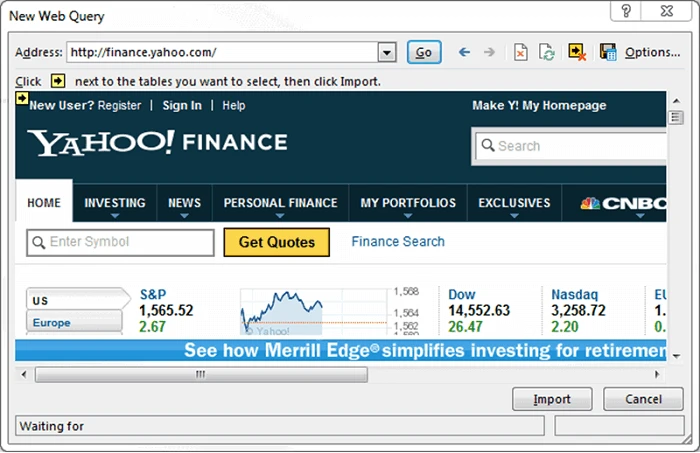

Method 2: Excel Web Queries to Scrape Website

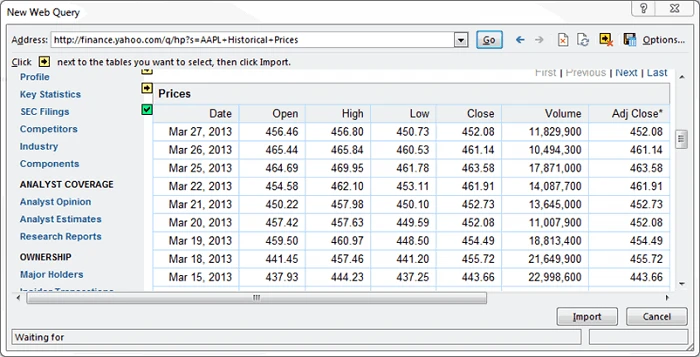

Instead of copying and pasting data from a web page by hand, you can use Excel Web Queries to quickly retrieve data from a standard web page into an Excel worksheet. A web query automatically detects tables embedded in the page's HTML. Web queries are also useful where a standard ODBC (Open Database Connectivity) connection is hard to create or maintain. With Excel Web Queries, you can scrape a table directly from a website, provided the data sits in an HTML table.
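Excel performs this table detection internally. As a rough illustration of the idea (not Excel's actual implementation), the Python sketch below uses only the standard library's `html.parser` to find every `<table>` in a page and collect its rows as lists of cell text. The names `TableCollector` and `detect_tables` and the sample HTML are hypothetical. The sketch assumes well-formed markup with explicit closing tags and does not handle nested tables, `colspan`/`rowspan`, or tables that a page builds with JavaScript.

```python
from html.parser import HTMLParser


class TableCollector(HTMLParser):
    """Collect every <table> in a page as a list of rows (lists of cell text).

    Assumes explicit </td>, </th>, and </tr> closing tags (hypothetical helper).
    """

    def __init__(self):
        super().__init__()
        self.tables = []   # one entry per <table>, each a list of rows
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Collapse runs of whitespace inside the cell into single spaces.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None
            self._cell = None

    def handle_data(self, data):
        # Text outside a cell (e.g. whitespace between tags) is ignored.
        if self._cell is not None:
            self._cell.append(data)


def detect_tables(html):
    """Return all tables found in an HTML string."""
    parser = TableCollector()
    parser.feed(html)
    parser.close()
    return parser.tables


sample = """
<p>Intro text that is not part of any table.</p>
<table>
  <tr><th>Name</th><th>Price</th></tr>
  <tr><td>Widget</td><td>4.50</td></tr>
  <tr><td>Gadget</td><td>12.00</td></tr>
</table>
"""
print(detect_tables(sample))
# prints: [[['Name', 'Price'], ['Widget', '4.50'], ['Gadget', '12.00']]]
```

Each inner list corresponds to one table you could pick in the New Web Query dialog; the paragraph of text outside the table is skipped.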

6 steps to extract website data with Excel web queries

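Step 1: Go to Data > Get External Data > From Web. (This is the path in older versions such as Excel 2010; in recent versions, From Web opens Power Query instead, and the classic wizard appears as From Web (Legacy) after you enable the legacy data import wizards under File > Options > Data.)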

Step 2: A browser window named “New Web Query” will appear.

Step 3: Type the web address into the address bar.

Step 4: The page will load and show yellow arrow icons next to the tables it detects.

Step 5: Click the icon next to the table you want.

Step 6: Click the Import button.

The web data is now scraped into your Excel worksheet, arranged in rows and columns.

Method 3: Scrape Website with Excel VBA

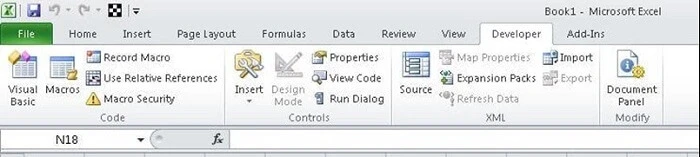

Most of us use Excel formulas (e.g. =AVERAGE(…), =SUM(…), =IF(…)) a lot, but are less familiar with Excel's built-in language, Visual Basic for Applications (VBA). VBA code is commonly known as “macros”, and workbooks that contain macros are saved as .xlsm files. Before using VBA, you need to enable the Developer tab in the ribbon (right-click the ribbon, choose Customize the Ribbon, and check Developer). Then set up your worksheet layout. In the developer interface, you can write VBA code attached to various events.

Using Excel VBA is a bit technical, so it is not very friendly for non-programmers. VBA works by running macros: step-by-step procedures written in Visual Basic.

6 steps to scrape website data using Excel VBA

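Step 1: Open Excel, press ALT + F11 to open the Visual Basic Editor, and add a new module (Insert > Module).

Step 2: Add references to the MSXML2 and MSHTML libraries (in the Visual Basic Editor, go to Tools > References and check Microsoft XML, v6.0 and Microsoft HTML Object Library). These libraries let your macro send HTTP requests and work with HTML pages.

Step 3: Declare variables for the XMLHTTP object and the HTML document.

Step 4: Use XMLHTTP to make a GET request to the target URL and parse the response into an HTML document.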

Step 5: Extract the needed data using DOM navigation/selectors, and export the scraped data to Excel.

Step 6: Release the object variables (for example, by setting them to Nothing) to free memory. Repeat the steps to scrape multiple pages if needed.
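This method uses VBA, but the same request, parse, extract, and export flow can be sketched in Python with only the standard library, which may help you see the steps end to end outside Excel. This is a swapped-in illustration, not the VBA code itself: `urllib.request` stands in for XMLHTTP, `html.parser` stands in for MSHTML, and a UTF-8 CSV file (written with a byte-order mark so Excel detects the encoding) stands in for writing to the worksheet. The function names, the single-class selector, and the sample markup are hypothetical, and the extractor only matches elements by one class name.

```python
import csv
import urllib.request
from html.parser import HTMLParser


def fetch_html(url, timeout=10):
    """Step 4 analogue: send a GET request and return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class ClassTextExtractor(HTMLParser):
    """Step 5 analogue: collect the text of every element that has a given class."""

    def __init__(self, css_class):
        super().__init__()
        self.css_class = css_class
        self.results = []
        self._tag = None    # tag name of the matching element we are inside
        self._depth = 0     # nesting depth of that tag name
        self._buffer = []   # text fragments of the current match

    def handle_starttag(self, tag, attrs):
        if self._tag is not None:
            if tag == self._tag:
                self._depth += 1  # same tag nested inside the match
        elif self.css_class in (dict(attrs).get("class") or "").split():
            self._tag, self._depth, self._buffer = tag, 1, []

    def handle_endtag(self, tag):
        if self._tag is not None and tag == self._tag:
            self._depth -= 1
            if self._depth == 0:  # the matching element just closed
                self.results.append(" ".join("".join(self._buffer).split()))
                self._tag = None

    def handle_data(self, data):
        if self._tag is not None:
            self._buffer.append(data)


def export_csv(rows, path):
    """Write rows to CSV; 'utf-8-sig' adds a BOM so Excel reads UTF-8 text correctly."""
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        csv.writer(f).writerows(rows)


def scrape_to_csv(html, css_class, path):
    """Extract matching elements and save them as one column headed by the class name."""
    parser = ClassTextExtractor(css_class)
    parser.feed(html)
    parser.close()
    export_csv([[css_class]] + [[value] for value in parser.results], path)
    return parser.results
```

For a live page you would call something like `scrape_to_csv(fetch_html(url), "price", "prices.csv")`, where `url` and the class name `price` are placeholders for your target site. The `with` blocks close the network connection and the file automatically, which plays the role of the cleanup in Step 6.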

Final Words

You have now learned 3 different ways to pull data from a website into Excel. Choose the one that best suits your situation. If you don't have coding skills, or you want to save time and energy on data collection, Octoparse is a great choice.