Have you ever thought that Google sheet can do the web scraping for you? The truth is, as a powerful cloud-based tool, Google Sheets can scrape data from websites by using 5 functions! With the scraped data, you can share it directly with your team or friends or integrate it with other third-party tools.

By reading the following parts, you can learn detailed steps on how to leverage these 5 functions, as well as another automated scraping tool that makes the whole task even easier.

5 functions of Google Sheets for Web Scraping

Method 1: Using ImportXML in Google Spreadsheets

ImportXML is a function in Google Spreadsheets that allows you to import data from structured sources like XML, HTML, CSV, TSV, and RSS feeds using XPath queries.

Here’s what it looks like:

=IMPORTXML(URL, xpath_query, locale)

- URL: The URL of the webpage.

- xpath_query: An XPath query to select the desired data.

- locale: A language and region locale code to use when parsing the data. If unspecified, the document locale is used.

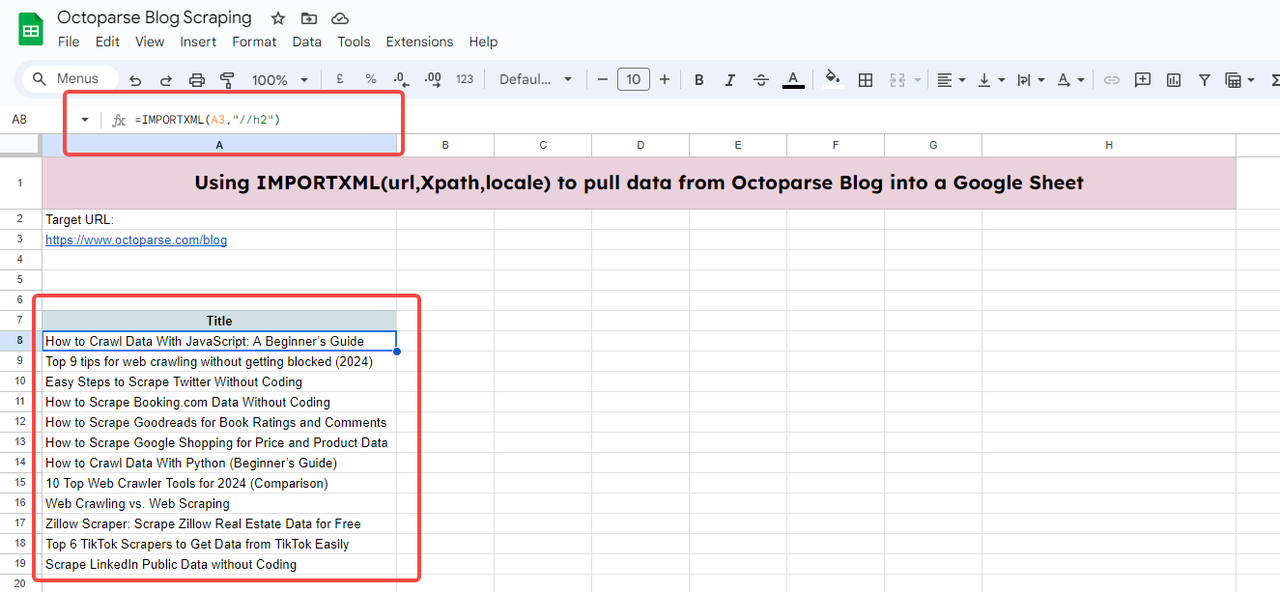

Below, I will show you an example of how to scrap the titles of the latest articles from the Octoparse Blog.

To do this, first, you need to create a new spreadsheet or open an existing one. Then click on the cell where you want the imported data to appear and enter the URL and the XPath of the element that you want to extract.

You would need to get the Xpath of the titles of the articles by inspecting(right click and you will see inspect) the target element in the website.

Here,

- URL: https://www.octoparse.com/blog

- XPath Query: //h2

//h2 means the exact Xpath of all the blog titles on page one of the Octoparse blog.

So the complete function would be:

=IMPORTXML(“https://www.octoparse.com/blog”, “//h2”)

Enter the function in the cell where you want the imported data to appear. You might also need to click access data to allow Google Sheets to import data from the outside.

After a few seconds of loading, you will get all the titles of page one blog.

Tips:

- You can also replace the URL with a cell as long as you put the URL into the cell. Here, see A3.

- Remember to add quotation marks when you put the Xpath and the URL. If you replace them with cell content, then no quotation marks are needed.

- If there are already quotation marks, the quotation marks inside the Xpath need to be single. For example, “//div[@class=’author’]/span”. Wrong spelling like “//div[@class=”author”]/span” leads to an error.

- You can ignore the “Locale” if you want the document locale.

- If you are not familiar with XPath, find the XPath cheat sheet.

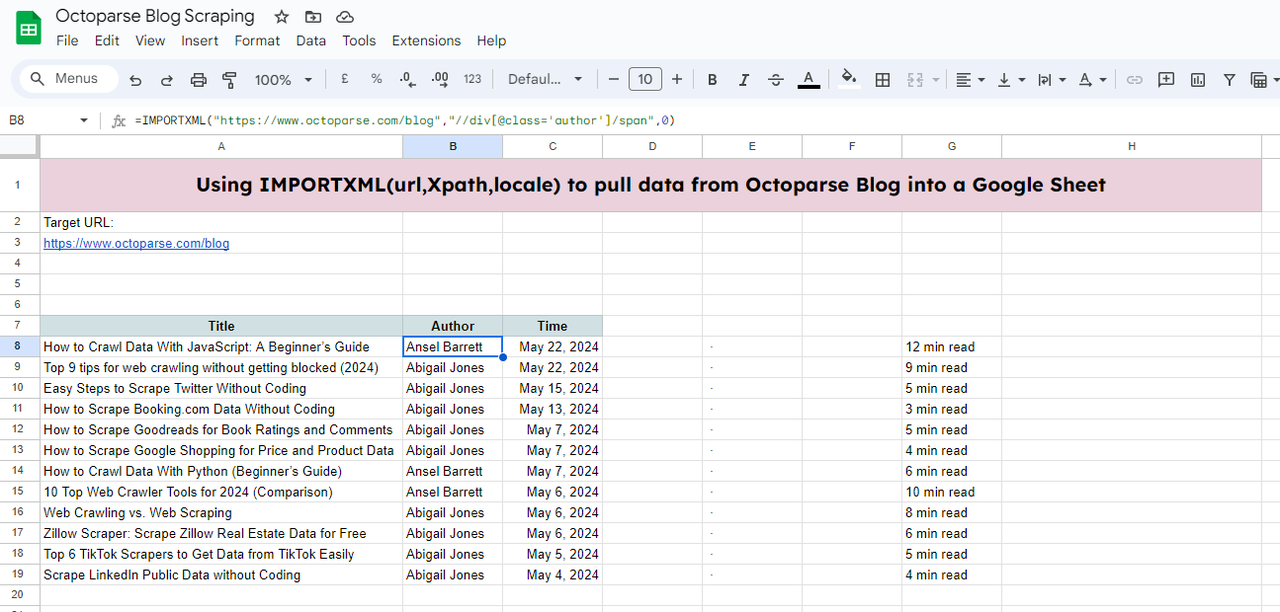

You can continue to fill the sheet with other data by following the same steps above.

Example Google sheet: https://docs.google.com/spreadsheets/d/18Tkp6rP9p1Az_AlXyOJlrqVxwESpTDOf7MSIweZnOD0/edit#gid=0

Method 2: Using ImportHTML in Google Spreadsheets

The IMPORTHTML function in Google Sheets is designed to fetch data from tables and lists within HTML pages. To use it, you would need the target website’s URL and the query, which is either “table” or “list”.

The function would look like this:

=IMPORTHTML(url, query, index)

- url: The URL of the webpage containing the data.

- query: “table” or “list”, depending on what you want to extract.

- index: The position of the table or list on the webpage (starting from 1).

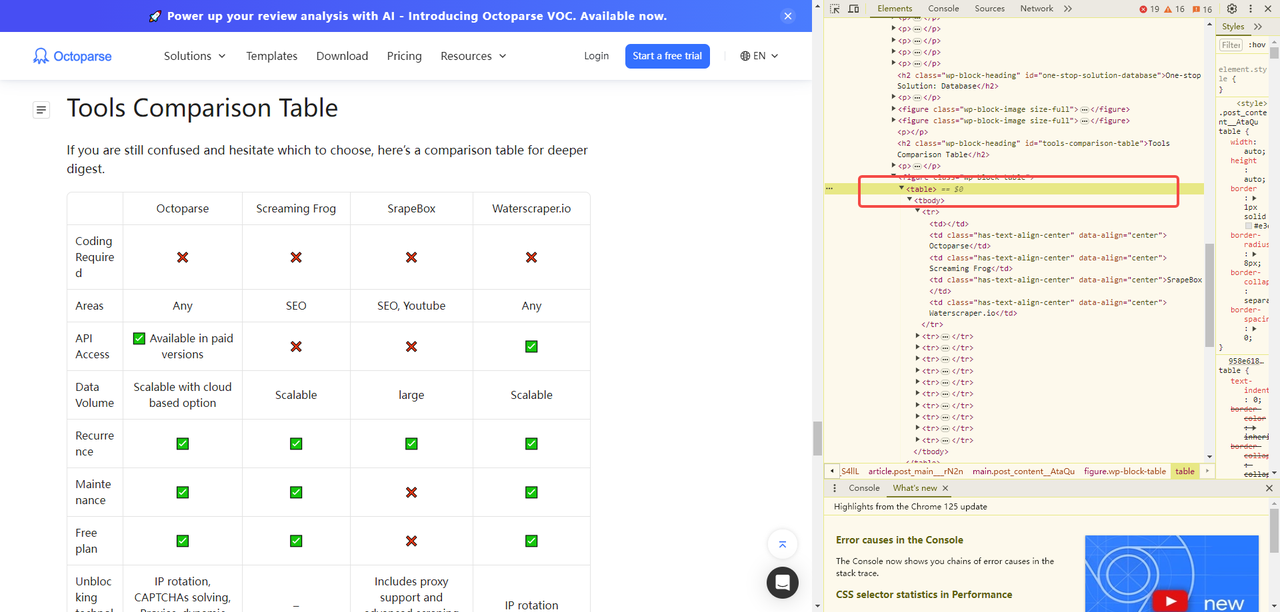

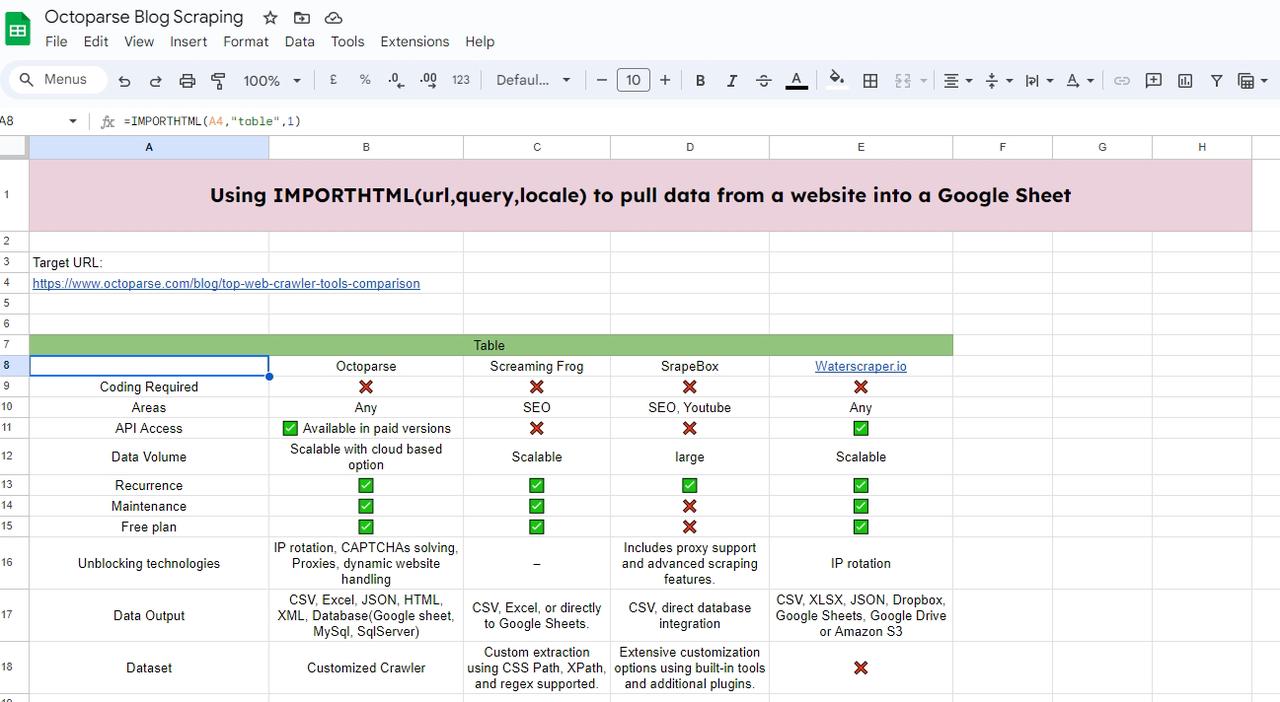

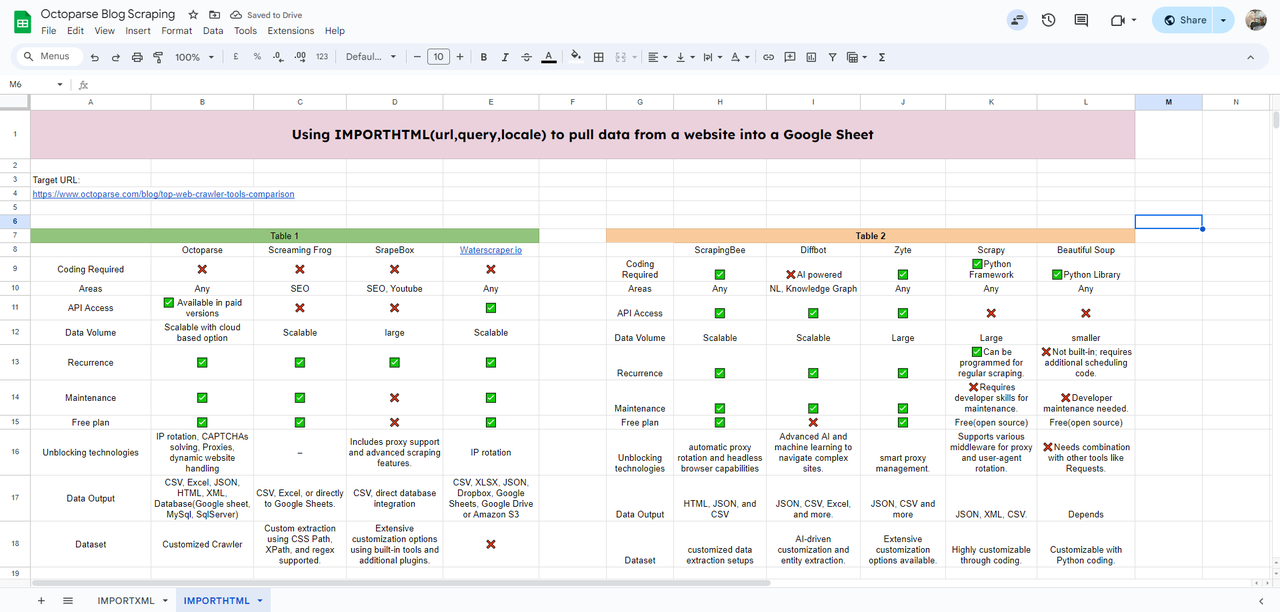

In this example, I will scrape a table from one of Octoparse’s blogs.

To begin with, first create a new sheet and then open the target URL.

Here, you can see the HTML that our target table is labeled <table>; If you want to scrape list, then the label would be <list>.

To scrape the table from the blog, we need to enter the function IMPORTHTML into the cell where we want the imported data to appear.

Enter:

=IMPORTHTML(“https://www.octoparse.com/blog/top-web-crawler-tools-comparison”, “table”, 1)

Then you will have the table loaded.

You can continue scraping by changing the table index to 2, so you will get the second table in the blog.

Tips:

- You will need to add double quotation marks to the query.

- You can replace the URL with a cell where the URL is populated.

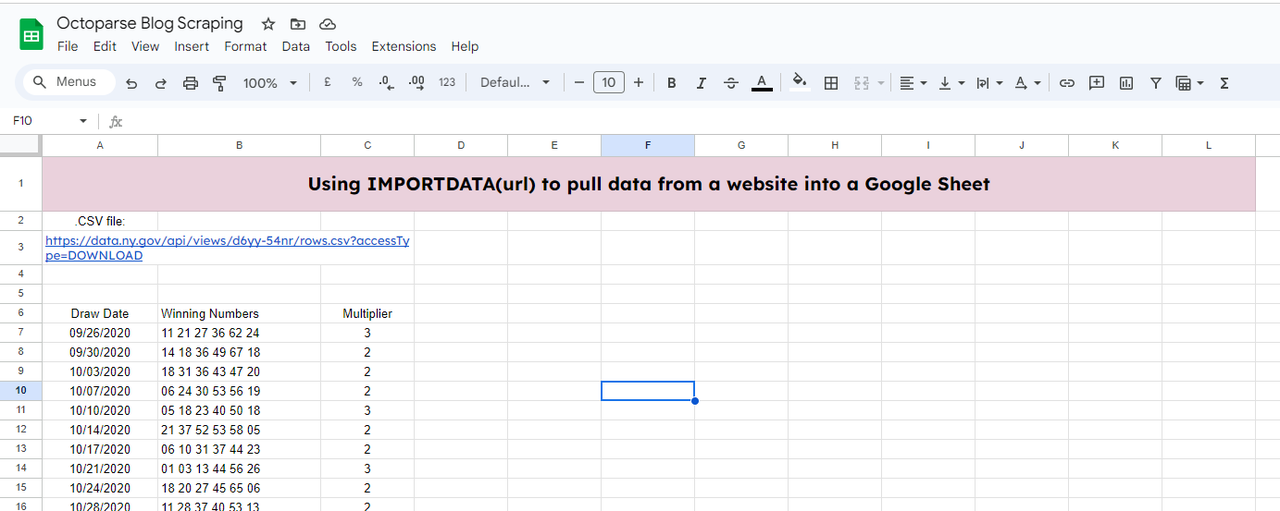

Method 3: Using ImportDATA in Google Spreadsheets

The IMPORTDATA function imports data from a given URL in CSV (Comma-Separated Values) or TSV (Tab-Separated Values) format directly into Google Sheets.

All you need is just one url of your target website. Simple, is it?

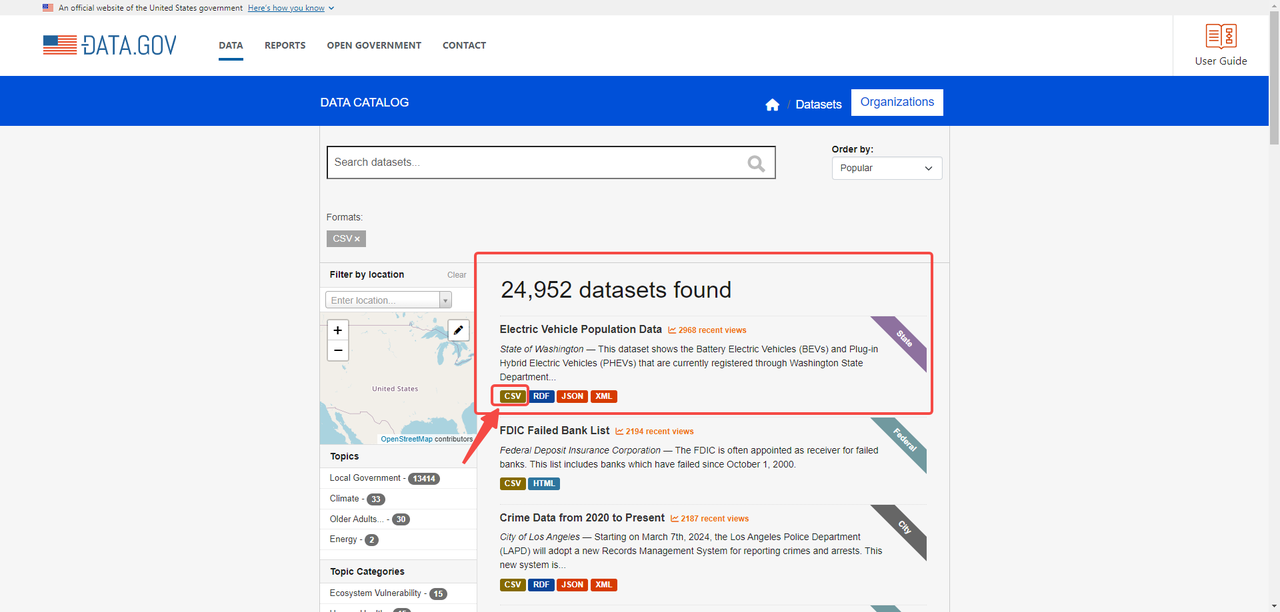

Let’s say we need to scrape data from the website: https://catalog.data.gov/dataset/?res_format=CSV

On the website, we can see the CSV file through the link: https://data.wa.gov/api/views/f6w7-q2d2/rows.csv?accessType=DOWNLOAD (hover to the button CSV and right click to copy the address)

To import the data into your Google Sheets, first, you would need to create a new sheet like what we did in the last two methods. And use the function below:

=IMPORTDATA(A3,”,”)

A3: is where your .csv file link is located

“,”: separates the data fields in the imported file

Then you will get the imported data.

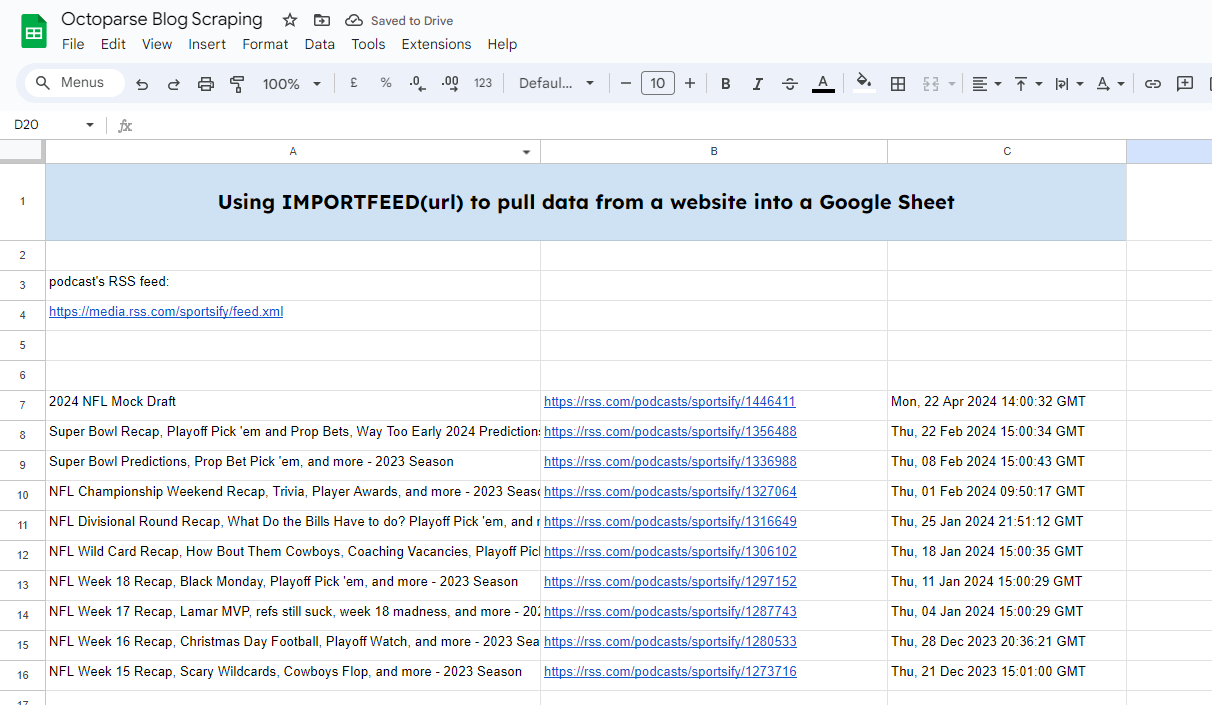

Method 4: Using ImportFeed in Google Spreadsheets

The IMPORTFEED function fetches RSS or ATOM feed data from a given URL.

It looks like this:

=IMPORTFEED(url, [query], [headers], [num_items])

- url: The URL of the RSS or ATOM feed.

- query: [Optional] A query to fetch specific items. Default is

""(empty string), which fetches all items. - headers: [Optional] Whether to include headers. Default is

TRUE. - num_items: [Optional] The number of items to fetch. Default is 20.

To do this, we will need a podcast’s RSS feed: https://rss.com/podcasts/sportsify/

Then enter the function:

=IMPORTFEED(A4,””,””,10)

Here I have input the RSS feed link in A4. And I want to pull 10 feeds out.

And you will get the result real quick.

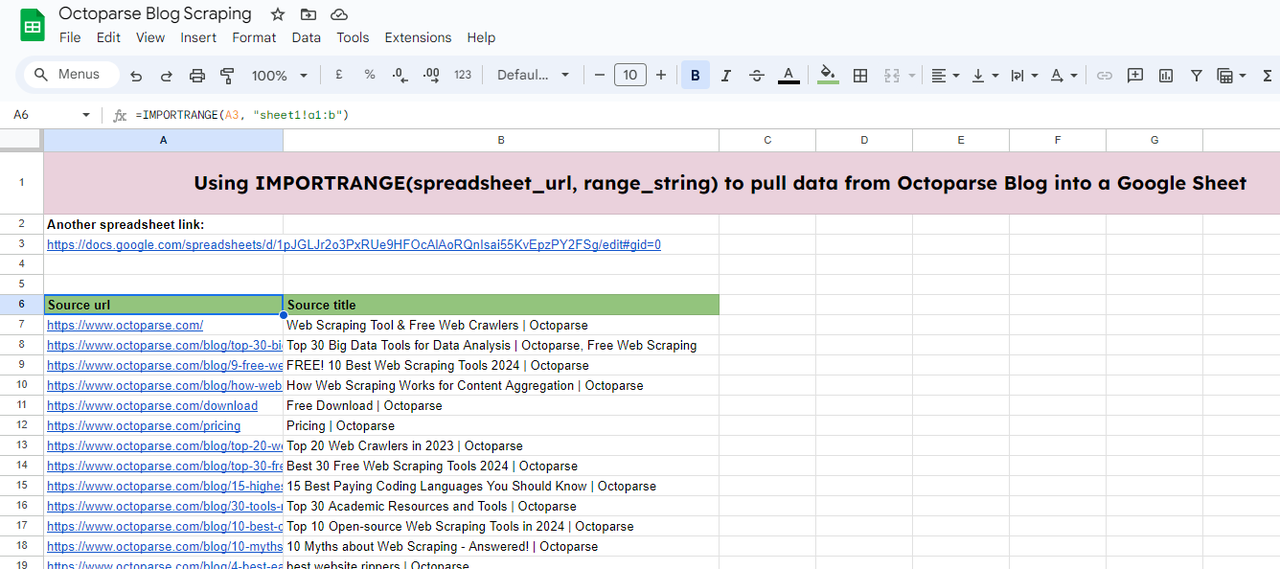

Method 5: Using ImportRange in Google Spreadsheets

The IMPORTRANGE function in Google Sheets allows you to import data from one Google Sheets spreadsheet into another. This is particularly useful for consolidating data from multiple sources or sharing data across different sheets while keeping them dynamically linked.

Here’s what it looks like:

=IMPORTRANGE(spreadsheet_url, range_string)

- spreadsheet_url: The URL of the spreadsheet from which you want to import data. This should be in quotation marks.

- range_string: A string that specifies the range of cells to import. This is typically in the format

"SheetName!A1:D10".

In this example, I need to import data from another spreadsheet(https://docs.google.com/spreadsheets/d/1pJGLJr2o3PxRUe9HFOcAlAoRQnIsai55KvEpzPY2FSg/edit#gid=0) to the one I am editing.

To do this, enter the function:

=IMPORTRANGE(A3, “sheet1!a1:b”)

- A3: is where the source data is stored.

- sheet1!a1:b: I need the data from sheet1 of the target sheet. And the data range is from A1 to B column.

Then, you can get the data after it finishes loading.

Limitations of Google Sheets in Web Scraping

Although these 5 functions simplify our way of importing data from websites to Google Sheets, there are many limitations.

Data Volume

First, these functions cannot import large volumes of data which involve pagination. As you can see in the IMPORTXML example, we can only scrape one page of data. If you need all the pages, you will need to do it by changing the URL continuously.

Dynamic Content

The above-mentioned functions can only scrape static and structured data. While dynamic data loaded with Javascript and AJAX is out of their reach.

Rate Limits

If you request the data too frequently, you may trigger Google’s rate limiting, preventing you from further data extraction temporarily.

Constant Maintenance

Since some functions rely on the HTML of the website to locate the data, once the structure of the website changes, your data will be affected and become invalid.

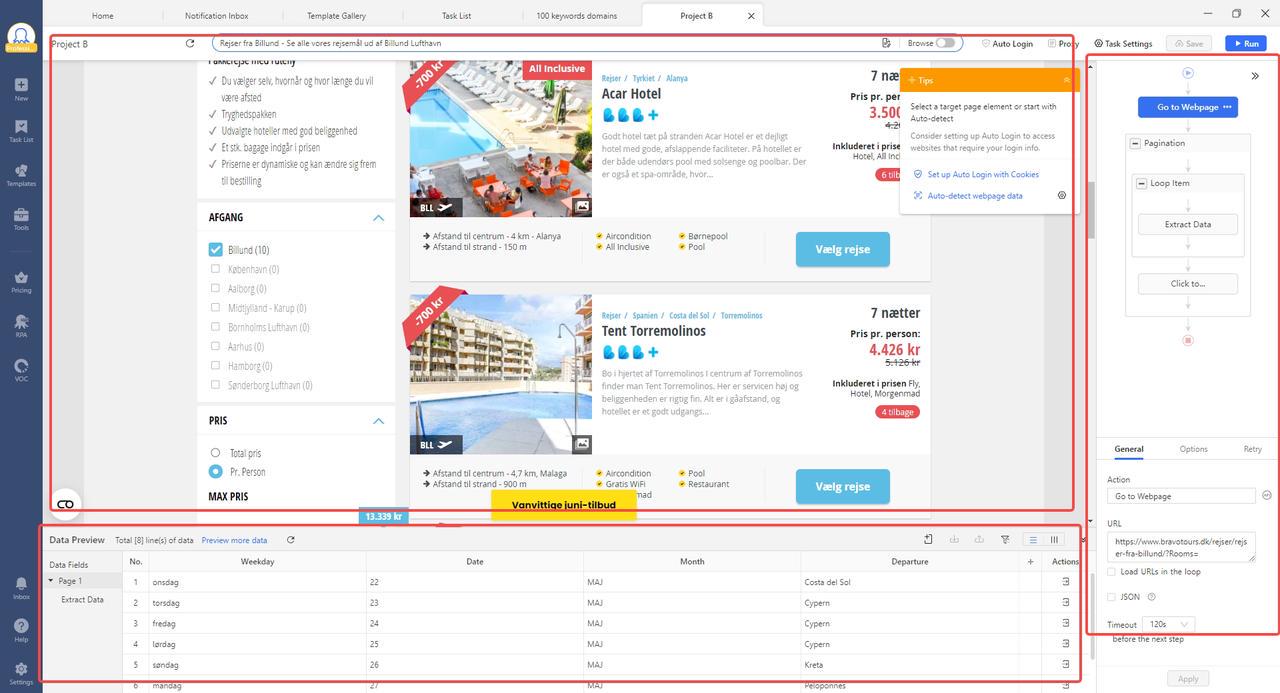

One-stop Automated Solution: No-code Web Scraping Software

If you need more than just a table or list of data, and you don’t want to handle script writing, then a no-code web scraping software like Octoparse might be your next perfect solution.

There are many pre-built scrapers (inside Octoparse) on the Internet that cater to the most common needs of our businesses and lives.

For example:

https://www.octoparse.com/template/google-maps-contact-scraper

There are many other scrapers for you to explore. Visit: Octoparse’s pre-built templates

With just a few parameters and clicks, and you can get all the data in one go without needing to do the tedious manual copy and paste.

If you have a more complex case to scrape, then you can use Octoparse’s custom scraping interface. In the interface, you can build your own automated workflow (scraping rules) the way you navigate the website. All of these are done by point and click and no code is involved thanks to the auto-detection features.

Other features you can get from custom scraping:

- Custom scrapers service and data service.

- Anti-blocking settings: CAPTCHAs, IP rotation, User agents, intervals, and Log-in.

- Complex site handled: infinite scrolling, dropdown, hover, retry, AJAX loading.

- Cloud extraction and data storage.

- Free plan and 14-day free trial for Standard and Professional plans.

- Pay-as-you-go residential proxies; 99 Million+ IPs from 155 countries.

- Highly responsive support team: 24/7 via live chat in intercom

- Third-party app integration via Octoparse RPA; API access.

Reading Octoparse Help Center if you still have any questions on scraping website data. If you’re looking for a data service for your project, Octoparse data service is a good choice. We work closely with you to understand your data requirements and make sure we deliver what you desire. Talk to an Octoparse data expert now to discuss how web scraping services can help you maximize efforts.