Supply chain management and logistics are the backbone of any successful business. Businesses depend on goods being transported and delivered efficiently and cost-effectively. However, supply chain processes are complex, and the data behind them is often spread across many sources. Web scraping can improve your logistics operations by automating data extraction from the web.

We’ll discuss how web scraping helps address supply chain challenges, what data you can scrape, and how Octoparse can automate your scraping workflows. Let’s begin.

The Challenges of Logistics and Supply Chain

Logistics is the planning, implementation, and management of the movement and storage of products, services, and associated information from point of origin to point of consumption. As an essential component of supply chain management, it covers transportation, storage, warehousing, material handling, and inventory management within and between firms. As a logistician or supply chain specialist, you face a variety of complicated challenges every day.

Supplier reliability can be a constant headache

Your suppliers may be inconsistent with deliveries, lead times may change, and product quality can fluctuate. This disrupts your own operations and customer fulfillment.

Rising costs also put pressure on your margins

Transportation and inventory holding costs steadily increase due to factors outside your control. Ensuring you’re paying competitive rates and optimizing inventories is a full-time job.

Inventory management itself is challenging

You have to determine the correct stock levels, reorder points, and safety stocks for thousands of items. Inaccurate assumptions can lead to stockouts or excess inventory, both of which are costly.
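To make these terms concrete, here is a minimal sketch of one common textbook rule for a single item: safety stock is a service-level factor times the variability of daily demand scaled by the square root of the lead time, and the reorder point is expected demand during the lead time plus that safety stock. The demand figures, the five-day lead time, and the factor `z=1.65` (roughly a 95% service level if daily demand is approximately normal and lead time is fixed) are illustrative assumptions, not values from any real operation.

```python
import math
from statistics import mean, stdev


def reorder_point(daily_demand, lead_time_days, z=1.65):
    """Return (safety_stock, reorder_point) for one item.

    Assumes a fixed lead time and roughly normal daily demand;
    z is the service-level factor (1.65 is about 95%).
    """
    avg = mean(daily_demand)
    sd = stdev(daily_demand)  # sample standard deviation of daily demand
    safety_stock = z * sd * math.sqrt(lead_time_days)
    return safety_stock, avg * lead_time_days + safety_stock


# Hypothetical units sold per day over one week.
demand = [40, 52, 47, 38, 55, 49, 44]
safety, rop = reorder_point(demand, lead_time_days=5)
print(f"safety stock: {safety:.0f} units, reorder point: {rop:.0f} units")
```

The lead time is the input most likely to drift without notice, which is one reason supplier lead times are worth tracking from external sources.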

Demand forecasting for your products is difficult yet critical

Overestimating demand results in waste, while underestimating leaves money on the table. Precise forecasts help you optimize production, transportation, and inventory investments.

All these issues would be much easier to solve with access to accurate and relevant data. However, data about suppliers, shipping costs, product prices, and competitor activities are often spread across various websites and portals.

There is no consolidated view of performance metrics and key indicators. Data must be manually collected, which is inefficient and error-prone at scale. This is where automated web scraping can help. By extracting structured data programmatically from the web, you can gain invaluable supply chain insights from external sources. This gives you a more holistic view of your end-to-end operations for improved logistics and supply chain management.

Web scraping automates the extraction of both structured and unstructured data from web pages. You can scrape details like supplier lead times, shipping costs, product specs, and pricing data from multiple websites. This gives you a unified view of critical supply chain metrics that live outside your enterprise systems.
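As a rough illustration of what "extracting structured data" means, the sketch below turns an HTML table into a list of records using only Python's standard library. The HTML snippet, the SKUs, and the field names are made up for the example; a real supplier page will have different markup and usually needs more robust handling.

```python
from html.parser import HTMLParser

# Hypothetical supplier listing; real pages will use different markup.
SAMPLE_HTML = """
<table id="catalog">
  <tr><th>SKU</th><th>Lead time (days)</th><th>Unit price (USD)</th></tr>
  <tr><td>PAL-100</td><td>14</td><td>12.50</td></tr>
  <tr><td>PAL-200</td><td>21</td><td>18.75</td></tr>
</table>
"""


class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_data(self, data):
        if self._in_cell:
            self._row[-1] += data

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def parse_catalog(html):
    """Convert the table rows (minus the header) into typed records."""
    parser = TableParser()
    parser.feed(html)
    _header, *body = parser.rows
    return [
        {"sku": sku.strip(), "lead_time_days": int(lead), "unit_price": float(price)}
        for sku, lead, price in body
    ]


records = parse_catalog(SAMPLE_HTML)
print(records)
```

A no-code tool does the same job visually: it locates repeated elements on the page and maps them to named fields.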

What You Can Get with Web Scraping

There are many types of useful supply chain data you can scrape from websites to improve your logistics operations:

Supplier and vendor data

By scraping vendor websites, you can obtain crucial information like item numbers, product specs, minimum order quantities, lead times, and expected delivery schedules. Tracking changes in this data over time helps you ensure consistent performance from suppliers. You’ll know quickly if lead times increase, minimum order sizes go up, or new fees are introduced. This allows you to proactively manage suppliers or seek alternatives.
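Tracking changes over time comes down to comparing one scrape with the next. The sketch below assumes each scrape has already been reduced to a dictionary keyed by SKU; the SKUs, field names, and values are hypothetical.

```python
def diff_snapshots(previous, current, fields=("lead_time_days", "min_order_qty")):
    """Return (sku, field, old, new) for every tracked field that changed."""
    changes = []
    for sku, new in current.items():
        old = previous.get(sku)
        if old is None:
            continue  # newly listed item: nothing to compare against
        for field in fields:
            if old.get(field) != new.get(field):
                changes.append((sku, field, old.get(field), new.get(field)))
    return changes


# Hypothetical snapshots from two weekly scrapes of the same vendor page.
last_week = {
    "PAL-100": {"lead_time_days": 14, "min_order_qty": 50},
    "PAL-200": {"lead_time_days": 21, "min_order_qty": 100},
}
this_week = {
    "PAL-100": {"lead_time_days": 18, "min_order_qty": 50},
    "PAL-200": {"lead_time_days": 21, "min_order_qty": 150},
}

changes = diff_snapshots(last_week, this_week)
for sku, field, old, new in changes:
    print(f"{sku}: {field} changed from {old} to {new}")
```

The list of changes can then feed an alert or a weekly supplier review.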

Product price comparisons

Scraping leading e-commerce sites daily can reveal how your product prices compare with those of competitors. You get an up-to-date view of whether your prices are too high relative to the market. This information places you in a strong position when negotiating with suppliers. You’ll know how much room you have to lower prices and stay competitive on sites like Amazon.
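One simple way to express "how your prices compare" is the percentage gap between your price and the median competitor price for the same item. The sketch below assumes you have already matched listings to your own SKUs; all prices and SKUs are invented for illustration.

```python
from statistics import median


def price_gaps(own_prices, competitor_prices):
    """Return {sku: percent gap vs. the competitor median}.

    A positive gap means your price is above the median competitor price.
    """
    gaps = {}
    for sku, own in own_prices.items():
        rivals = competitor_prices.get(sku)
        if not rivals:
            continue  # no competitor listings found for this item
        benchmark = median(rivals)
        gaps[sku] = round((own - benchmark) / benchmark * 100, 1)
    return gaps


# Hypothetical prices in USD.
own = {"PAL-100": 22.0, "PAL-200": 30.0, "PAL-300": 15.0}
competitors = {"PAL-100": [19.0, 20.0, 21.0], "PAL-200": [30.0, 50.0]}

gaps = price_gaps(own, competitors)
print(gaps)
```

The median is used here instead of the minimum so that a single outlier listing does not dominate the comparison; that is a design choice, not the only reasonable benchmark.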

Transport and shipping data

Carrier websites often publish shipment statuses in real time, keyed by tracking number. Scraping these portals programmatically lets you extract valuable data like current locations, estimated times of arrival, and delivery confirmations. This transparency into the movement of goods lets you proactively manage disruptions and exceed customer expectations.
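Once shipment statuses are in a structured form, flagging likely disruptions can be as simple as comparing each estimated arrival with the date you promised the customer. The sketch below assumes scraped records with hypothetical field names (`tracking`, `status`, `eta`, `promised`) and ISO-formatted dates.

```python
from datetime import date


def at_risk(shipments):
    """Return (tracking, days_late) for undelivered shipments whose ETA
    falls after the promised delivery date."""
    flagged = []
    for shipment in shipments:
        if shipment["status"] == "delivered":
            continue
        eta = date.fromisoformat(shipment["eta"])
        promised = date.fromisoformat(shipment["promised"])
        days_late = (eta - promised).days
        if days_late > 0:
            flagged.append((shipment["tracking"], days_late))
    return flagged


# Hypothetical records, as they might look after scraping a carrier portal.
shipments = [
    {"tracking": "TRK001", "status": "in_transit", "eta": "2024-05-10", "promised": "2024-05-08"},
    {"tracking": "TRK002", "status": "delivered", "eta": "2024-05-06", "promised": "2024-05-07"},
    {"tracking": "TRK003", "status": "in_transit", "eta": "2024-05-07", "promised": "2024-05-09"},
]

flagged = at_risk(shipments)
print(flagged)
```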

Competitor insights

Scraping competitors’ websites for their product catalogs, specs, images, and prices arms you with critical benchmarking metrics. You can compare your own catalogs, product ranges, pricing structures, and supply chain processes to industry leaders. This external perspective reveals inefficiencies and opportunities for improvement within your own logistics operations and supply chain performance. With the right data, you can identify best practices and optimization strategies from competitors.

By automating the extraction of these different types of supply chain data, you gain the insights required to make impactful changes that elevate performance, reduce costs and strengthen your competitive advantage.

Collect A Ton of Data With Octoparse in Four Steps

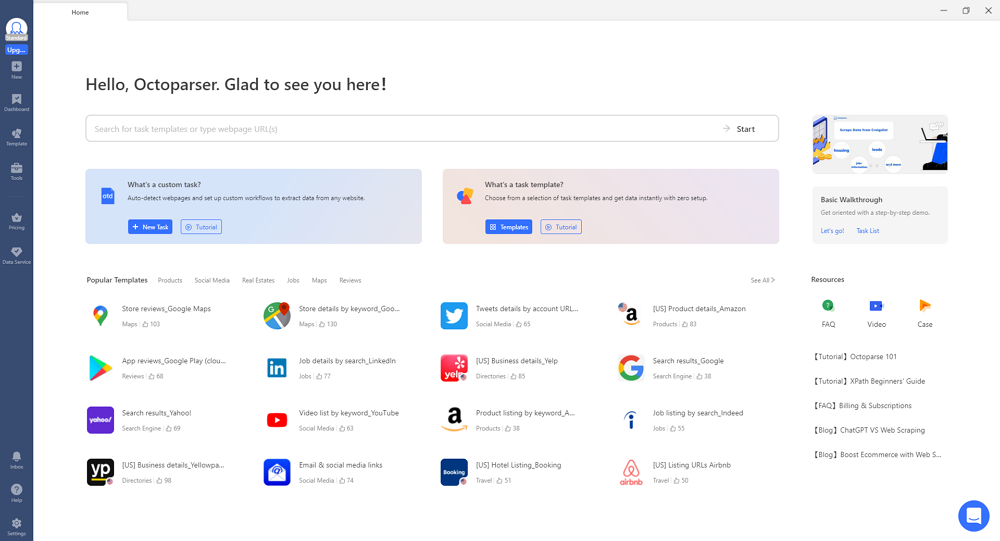

Octoparse is a powerful web scraping tool that can help you extract supply chain data at scale. Whether you’re an experienced coder or have no coding skills at all, you can use it in your daily work and collect thousands of rows of data with a few clicks.

First, download and install Octoparse on your device, then sign up for a free account to unlock its features. After that, follow the steps below to grab the data you need.

Step 1: Create a New Task

Open Octoparse and create a new task. Copy and paste the URL of the logistics website you want to extract data from into the search bar. Octoparse will load the page in its built-in browser.

Step 2: Select the Data You Want to Extract

Once the page has loaded, click “Auto-detect webpage data” in the Tips panel. Octoparse will scan the page and highlight any extractable data related to logistics. You can then choose which data you want to extract, such as shipping information, tracking numbers, delivery dates, or any other relevant data.

You can also preview all the selected data fields in the Data Preview panel at the bottom, and remove or rename fields as needed.

Step 3: Create a Workflow

After selecting the data fields you want to extract, click “Create workflow” to build a workflow on the right-hand side of the screen. This workflow will show you every step of the scraper. You can preview how each action works and ensure that it meets your requirements.

Step 4: Run the Task and Export the Data

Once you’ve verified the information and workflow, click “Run” to start the scraper. You can choose to run the task locally or on Octoparse’s cloud servers. After the task has finished running, you can export the data to an Excel, CSV, or JSON file, or send it to a destination such as Google Sheets or a database.
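An exported file is only the starting point; the value comes from what you do with it. As a minimal sketch, the code below reads a CSV export and picks the cheapest carrier for each route. The column names (`carrier`, `route`, `rate_usd`) and the rows are hypothetical, and the export is simulated with an in-memory string so the example runs on its own.

```python
import csv
import io

# Stand-in for an exported CSV file; the columns and values are made up.
EXPORT = """carrier,route,rate_usd
Carrier A,SHA-LAX,2150
Carrier B,SHA-LAX,1980
Carrier A,SHA-RTM,1720
Carrier B,SHA-RTM,1810
"""


def cheapest_by_route(csv_file):
    """Return {route: (carrier, rate)} with the lowest rate seen per route."""
    best = {}
    for row in csv.DictReader(csv_file):
        route = row["route"]
        rate = float(row["rate_usd"])
        if route not in best or rate < best[route][1]:
            best[route] = (row["carrier"], rate)
    return best


cheapest = cheapest_by_route(io.StringIO(EXPORT))
for route, (carrier, rate) in cheapest.items():
    print(f"{route}: {carrier} at ${rate:,.0f}")
```

To run this against a real export, pass an opened file instead of the in-memory string, for example `open("export.csv", newline="")`, where the file name is whatever you chose when exporting.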

By following these four simple steps, you can collect a ton of logistics data from various websites quickly and easily using Octoparse. Whether you’re a logistics company looking to streamline your operations or an individual looking to track your shipments, Octoparse can help you extract the data you need efficiently.

Wrap-up

Web scraping provides a means to acquire crucial external data that enhances logistics processes. Automating data extraction with Octoparse ensures you get accurate and timely supply chain metrics.

If you want to improve supply chain visibility, optimize costs, and reduce risks through web data, start scraping relevant web pages today. Try Octoparse’s free trial to start automating your own logistics-focused web scraping workflows.