Understanding the labor market and spotting the patterns in it depends on access to accurate, up-to-date job posting data. In this blog article, we’ll look at how web scraping can collect job posting data for labor market analysis and walk through a practical example. Used well, web scraping gives you insights that can inform your company’s hiring and planning decisions.

Why Is Job Posting Data Valuable?

Job posting data is a rich source of information for HR departments and research teams, offering a detailed view of the labor market. Here are a few ways it supports labor market analysis:

Market Demand and Trends

Job posting data provides a near-real-time view of market demand and industry trends. By analyzing the frequency and types of job postings in specific sectors, organizations can gauge the demand for different skill sets and identify emerging roles. For example, a rise in job postings for data scientists and artificial intelligence specialists suggests growing demand for those skills in the technology sector. These insights help HR departments align their talent acquisition strategies with what the market needs.
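As a rough illustration of tracking demand, the Python sketch below counts postings whose titles mention a given role in two periods and reports the percentage change. The role keywords and sample titles are hypothetical, and plain substring matching on titles is an assumption; real job titles vary widely, so in practice you would likely need a broader keyword list or a role taxonomy.

```python
from collections import Counter

def postings_by_role(postings, role_keywords):
    """Count postings whose title contains each role keyword (case-insensitive)."""
    counts = Counter()
    for posting in postings:
        title = posting["title"].lower()
        for role in role_keywords:
            if role.lower() in title:
                counts[role] += 1
    return counts

def demand_growth(previous, current):
    """Percentage change in posting counts per role between two periods.

    Roles with no postings in the previous period are skipped,
    because a percentage change from zero is undefined.
    """
    return {
        role: (current[role] - previous[role]) / previous[role] * 100
        for role in previous
        if previous[role] > 0
    }

# Hypothetical sample data: job titles scraped in two consecutive months.
ROLES = ["data scientist", "machine learning engineer"]
march = [{"title": "Senior Data Scientist"}, {"title": "Data Scientist"},
         {"title": "Machine Learning Engineer"}]
april = [{"title": "Data Scientist"}, {"title": "Lead Data Scientist"},
         {"title": "Data Scientist II"}, {"title": "Machine Learning Engineer"}]

growth = demand_growth(postings_by_role(march, ROLES), postings_by_role(april, ROLES))
print(growth)  # {'data scientist': 50.0, 'machine learning engineer': 0.0}
```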

Skill Requirements and Talent Acquisition

Job postings outline the qualifications, skills, and experience employers are looking for. Analyzing these requirements helps HR departments understand how skill needs are evolving and make informed decisions about talent acquisition. For instance, if job postings consistently highlight digital marketing expertise, that suggests a shift toward online marketing strategies. With this knowledge, HR departments can tailor their recruitment efforts to attract candidates with the right skills and gain an edge over competitors.
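One simple way to quantify this is to measure how often each skill appears across posting descriptions. The sketch below assumes you already have the description text for each posting; the skill list and sample descriptions are hypothetical, and whole-word keyword matching is a deliberately basic stand-in for more careful skill extraction.

```python
import re

def skill_share(descriptions, skills):
    """Fraction of postings that mention each skill at least once (case-insensitive)."""
    if not descriptions:
        return {skill: 0.0 for skill in skills}
    # Whole-word patterns so "SEO" does not match inside longer words.
    # Word boundaries (\b) do not suit skills ending in symbols such as "C++".
    patterns = {
        skill: re.compile(r"\b" + re.escape(skill) + r"\b", re.IGNORECASE)
        for skill in skills
    }
    return {
        skill: sum(1 for text in descriptions if pattern.search(text)) / len(descriptions)
        for skill, pattern in patterns.items()
    }

# Hypothetical skills and posting descriptions.
SKILLS = ["SEO", "Google Ads", "content marketing"]
descriptions = [
    "Experience with SEO and Google Ads required.",
    "Strong content marketing and SEO skills.",
    "Plan print campaigns and trade-show events.",
]

shares = skill_share(descriptions, SKILLS)
for skill, share in shares.items():
    print(f"{skill}: {share:.0%}")
```

Tracking these shares over time, rather than at a single point, is what reveals a shift in demand.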

Salary Ranges and Compensation Insights

Job postings often include salary information or salary ranges, which reveal market compensation trends. Analyzing this data lets HR departments benchmark their pay against industry standards and make competitive offers to attract top talent. Tracking salary ranges across regions also shows how compensation varies by location, so organizations can adjust their compensation strategies accordingly.
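To make regional benchmarking concrete, the sketch below takes the midpoint of each advertised range and computes the median midpoint per region. It assumes salaries appear as text like "$120,000 - $150,000" and that each posting has a region field; both the format and the sample postings are hypothetical. Postings that don't disclose a parseable range are skipped rather than guessed.

```python
import re
from collections import defaultdict
from statistics import median

# Assumed salary format: "$low - $high" with optional thousands separators.
RANGE_RE = re.compile(r"\$?(\d[\d,]*)\s*-\s*\$?(\d[\d,]*)")

def range_midpoint(salary_text):
    """Midpoint of a '$low - $high' salary range, or None if the text doesn't match."""
    match = RANGE_RE.search(salary_text or "")
    if match is None:
        return None
    low, high = (int(group.replace(",", "")) for group in match.groups())
    return (low + high) / 2

def median_salary_by_region(postings):
    """Median range midpoint per region, ignoring postings without a parseable range."""
    midpoints = defaultdict(list)
    for posting in postings:
        midpoint = range_midpoint(posting.get("salary"))
        if midpoint is not None:
            midpoints[posting["region"]].append(midpoint)
    return {region: median(values) for region, values in midpoints.items()}

# Hypothetical postings; only some disclose pay.
postings = [
    {"region": "Seattle", "salary": "$120,000 - $150,000"},
    {"region": "Seattle", "salary": "$100,000 - $130,000"},
    {"region": "Austin", "salary": "$90,000 - $110,000"},
    {"region": "Austin", "salary": "Competitive"},
]
print(median_salary_by_region(postings))  # {'Seattle': 125000.0, 'Austin': 100000.0}
```

The median is used instead of the mean so that a few unusually high or low ranges don't dominate the benchmark.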

Geographical Distribution and Workforce Planning

Job postings also include location information, which shows how job opportunities are distributed geographically. By analyzing where postings are concentrated, HR departments can make informed decisions about expanding operations, opening new offices, or targeting specific locations for talent acquisition. This supports strategic workforce planning by helping allocate resources to the regions with the strongest demand and growth opportunities.
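A minimal sketch of measuring geographic concentration: count postings per location and report each location’s share of the total. It assumes every posting carries a location string; the sample locations are hypothetical, and only surrounding whitespace is normalized here, so real data would likely need more cleanup (for example, "Seattle" vs "Seattle, WA").

```python
from collections import Counter

def location_shares(postings, top_n=3):
    """Share of postings in each location, highest first (top_n locations only)."""
    counts = Counter(posting["location"].strip() for posting in postings)
    total = sum(counts.values())
    return [(location, count / total) for location, count in counts.most_common(top_n)]

# Hypothetical scraped locations.
postings = [{"location": loc} for loc in
            ["Seattle, WA", "Seattle, WA", "Austin, TX", "Remote", "Seattle, WA ", "Austin, TX"]]

for location, share in location_shares(postings, top_n=2):
    print(f"{location}: {share:.0%}")
# Seattle, WA: 50%
# Austin, TX: 33%
```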

How Can Web Scraping Help Collect Job Posting Data?

Collecting job posting data manually poses several challenges that hurt efficiency and effectiveness. Web scraping addresses these problems and offers a range of benefits for HR departments and research teams. Let’s look at each challenge and how web scraping helps:

Time-Consuming and Resource-Intensive

Manually collecting job posting data requires significant human resources and consumes considerable time. HR departments and research teams need to scour multiple job boards, company websites, and social platforms to gather relevant postings. This process is not only time-consuming but also diverts valuable personnel from other critical tasks. Web scraping automates data extraction, allowing organizations to gather vast amounts of job posting data in a fraction of the time, freeing up resources for more strategic activities.

Lack of Consistency and Efficiency

Manual collection of job posting data is often inconsistent. Human error can introduce discrepancies, such as missing or inaccurate information, and navigating different websites and platforms by hand leads to inconsistencies in how data is extracted and organized. Web scraping applies the same predefined rules and extraction patterns to every page, so each record is captured in a standardized format. This uniformity improves data quality and makes analysis more accurate, giving organizations a reliable basis for decisions.
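To show what "predefined rules" can look like, here is a small sketch using Python’s standard `html.parser` module. The rules map CSS class names to output fields, and every posting card produces a record with the same keys. The markup, class names (`job-card`, `job-title`, and so on), and sample page are hypothetical; real job boards use their own markup, and this parser assumes each field’s text sits directly inside the tagged element.

```python
from html.parser import HTMLParser

# Hypothetical extraction rules: CSS class on the page -> field name in the output.
RULES = {"job-title": "title", "company": "company", "location": "location"}
CARD_CLASS = "job-card"  # hypothetical class that wraps one posting

class PostingParser(HTMLParser):
    """Apply the same class-based rules to every posting card on a page.

    Assumes flat markup: each field's text sits directly inside the tagged element.
    """

    def __init__(self, rules, card_class):
        super().__init__()
        self.rules = rules
        self.card_class = card_class
        self.records = []
        self.current_field = None

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.card_class in classes:
            # Every record starts with the same keys, so a missing field is explicit (None).
            self.records.append({field: None for field in self.rules.values()})
        for css_class in classes:
            if css_class in self.rules:
                self.current_field = self.rules[css_class]

    def handle_endtag(self, tag):
        self.current_field = None

    def handle_data(self, data):
        if self.current_field and self.records and data.strip():
            self.records[-1][self.current_field] = data.strip()

SAMPLE_HTML = """
<div class="job-card"><h2 class="job-title">Data Scientist</h2>
  <span class="company">Acme Corp</span><span class="location">Seattle, WA</span></div>
<div class="job-card"><h2 class="job-title">Data Analyst</h2>
  <span class="location">Austin, TX</span></div>
"""

parser = PostingParser(RULES, CARD_CLASS)
parser.feed(SAMPLE_HTML)
parser.close()
for record in parser.records:
    print(record)
```

Because the second card has no company element, its record still has a `company` key set to `None`, which keeps the dataset’s structure uniform and makes gaps easy to find.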

Volume and Analysis Challenges

The sheer volume of online job posting data makes manual collection and analysis a demanding process. Every day, thousands of job postings are published across many platforms, making it virtually impossible for HR departments and research teams to keep up. Manual data gathering limits the scope and depth of analysis, reducing the insights available. Web scraping, on the other hand, lets businesses retrieve large amounts of data quickly and efficiently. This wealth of data allows for more in-depth research, revealing relevant trends and patterns that might otherwise go unnoticed.
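When data is gathered from several platforms, the same job is often posted more than once, which can inflate counts. The sketch below removes such duplicates by treating postings with the same normalized title, company, and location as one. That three-field key is a simple heuristic chosen for illustration: it may merge genuinely separate openings with identical details, and it will miss duplicates whose titles are worded differently. The sample postings and `source` values are hypothetical.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivially different strings compare equal."""
    return " ".join(text.lower().split())

def deduplicate(postings):
    """Keep the first posting for each (title, company, location) combination."""
    seen = set()
    unique = []
    for posting in postings:
        key = tuple(normalize(posting[field]) for field in ("title", "company", "location"))
        if key not in seen:
            seen.add(key)
            unique.append(posting)
    return unique

# Hypothetical postings from two job boards; the first two describe the same job.
postings = [
    {"title": "Data Engineer", "company": "Acme Corp", "location": "Austin, TX", "source": "board_a"},
    {"title": "data engineer ", "company": "ACME Corp", "location": "Austin,  TX", "source": "board_b"},
    {"title": "Data Engineer", "company": "Acme Corp", "location": "Denver, CO", "source": "board_b"},
]
unique = deduplicate(postings)
print(len(unique))  # 2
```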

Data Reliability and Integrity

Manual collection of job posting data is prone to human error, which can lead to inaccuracies and inconsistencies. Data integrity can also suffer because manual collection relies on subjective human interpretation. Web scraping offers a systematic approach to data extraction that improves accuracy and consistency. By automating the process, organizations can reduce human error and subjective bias and maintain a consistent, reliable dataset for labor market analysis.
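Automated extraction is only as reliable as the checks applied to its output, so it helps to validate each record before analysis. The sketch below flags missing required fields and implausible salary ranges. The field names (`title`, `company`, `location`, `salary_min`, `salary_max`) are an assumed schema, not something any particular tool produces.

```python
REQUIRED_FIELDS = ("title", "company", "location")  # assumed schema

def validate(record):
    """Return a list of problems found in one scraped record; empty means it passed."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS if not record.get(field)]
    low, high = record.get("salary_min"), record.get("salary_max")
    # Only check the range when both ends are present; a minimum above the maximum is suspect.
    if low is not None and high is not None and not 0 < low <= high:
        problems.append("implausible salary range")
    return problems

# Hypothetical records; the second has an empty title and a reversed salary range.
records = [
    {"title": "Data Scientist", "company": "Acme Corp", "location": "Seattle, WA",
     "salary_min": 120_000, "salary_max": 150_000},
    {"title": "", "company": "Acme Corp", "location": "Austin, TX",
     "salary_min": 95_000, "salary_max": 80_000},
]
for record in records:
    print(validate(record) or "ok")
```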

Web scraping is a game-changer for HR departments and research teams, overcoming the challenges of manual job posting data collection. By automating data extraction, web scraping saves time, improves efficiency, keeps data consistent, enables large-scale analysis, and provides a reliable dataset for informed decision-making.

Implementing web scraping tools empowers organizations to leverage the vast amount of job posting data available online, unlocking valuable insights into market demand, industry trends, skill requirements, and geographical distribution. By embracing web scraping, HR departments and research teams can enhance their labor market analysis capabilities and gain a competitive edge in talent acquisition and resource planning.

Use Octoparse to Get Job Posting Data in Four Steps

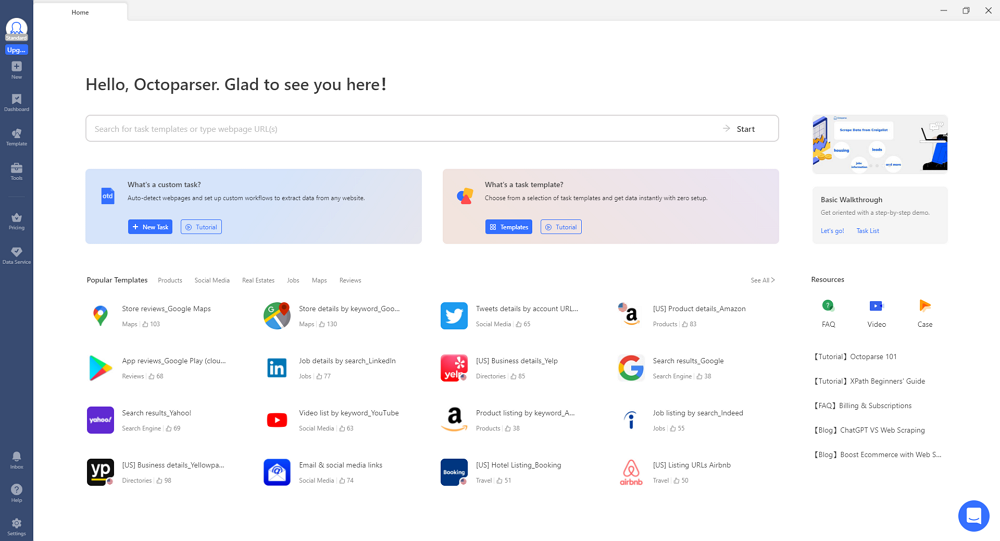

In this section, we will walk you through extracting job posting data with Octoparse, a user-friendly web scraping tool.

Octoparse eliminates the need for coding knowledge, making it accessible to users of all skill levels. Before we begin, ensure that you have downloaded and installed Octoparse on your device. When you launch the software, sign up for a free account to log in and get started.

For this example, we will demonstrate how to scrape Amazon salary data from Glassdoor. The target URL is as follows:

Target URL: https://www.glassdoor.com/Salary/Amazon-Salaries-E6036.htm

Step 1: Create a New Task

Copy and paste the target URL into the search bar in Octoparse. Click on “Start” to create a new task. The page will load within Octoparse’s built-in browser.

Step 2: Select the Desired Data Fields

Once the page has finished loading, locate the “Auto-detect webpage data” option in the Tips panel and click on it. Octoparse will scan the page and highlight the extractable data for you. Preview the detected data fields to confirm that Octoparse has identified the information you want. At the bottom, you can edit the detected fields: remove unnecessary ones or rename them.

In this case, you can extract fields such as the job title, total pay, base pay, additional pay, and total pay range for each position.
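Pay fields like these usually come out as display text rather than numbers. If you plan to analyze the total pay range in code after exporting, a small parser like the one below can turn it into numeric bounds. It assumes values look like "$150K - $250K/yr" (annual figures with an optional K or M suffix); that format is an assumption, so check it against the values you actually export. Hourly figures, for example, would need separate handling.

```python
import re

# Assumed display format, e.g. "$150K - $250K/yr"; check the actual values you export.
# The lookahead stops a following word (e.g. "monthly") from being read as an "M" suffix.
AMOUNT_RE = re.compile(r"\$\s*(\d[\d,]*(?:\.\d+)?)\s*([KkMm]?)(?![A-Za-z])")
MULTIPLIERS = {"k": 1_000, "m": 1_000_000}

def parse_pay_range(text):
    """Turn '$150K - $250K/yr' into (150000.0, 250000.0).

    A single amount such as '$175K/yr' gives (175000.0, 175000.0);
    text with no dollar amount returns None.
    """
    amounts = [
        float(number.replace(",", "")) * MULTIPLIERS.get(suffix.lower(), 1)
        for number, suffix in AMOUNT_RE.findall(text)
    ]
    if not amounts:
        return None
    return min(amounts), max(amounts)

print(parse_pay_range("$150K - $250K/yr"))
print(parse_pay_range("$175K/yr"))
```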

Step 3: Create and Modify the Workflow

Once you have selected all the desired data fields, click on “Create workflow.” A workflow diagram will appear on the right-hand side, illustrating how the scraper functions. By clicking on each action in the diagram, you can preview the scraper’s behavior and ensure it aligns with your intentions.

Step 4: Run the Task and Export the Data

Before proceeding, double-check your settings, for example that all the desired data fields are selected. Click on “Run” to start the scraper. Octoparse offers two options for running your task. For small projects, running it on your local device is suitable. For larger projects, such as scraping a page with over 10,000 results, Octoparse’s cloud servers are recommended. Cloud servers are available 24/7 and can process large tasks efficiently.

Once you choose an option, Octoparse handles the rest; all that’s left is to wait for the task to finish. When it’s done, you can export the data to Excel, CSV, or JSON files, or to Google Sheets or a database.
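Once exported, the data can be analyzed with any tool you like. As one hedged example, the Python sketch below reads a CSV export and lists the roles with the highest total pay. The column names "Job Title" and "Total Pay" and the "$185K/yr" value format are assumptions based on the fields chosen in Step 2; adjust them to match your actual export, especially if you renamed fields.

```python
import csv
import io

def to_number(text):
    """Convert a pay value such as '$185K/yr' or '$185,000' to 185000.0 (None if unparseable)."""
    cleaned = (text or "").strip().replace("$", "").replace(",", "").split("/")[0].strip()
    multiplier = 1
    if cleaned[-1:].upper() == "K":
        multiplier, cleaned = 1_000, cleaned[:-1]
    try:
        return float(cleaned) * multiplier
    except ValueError:
        return None

def top_paid_roles(csv_file, n=3):
    """Return the n (job title, total pay) pairs with the highest total pay."""
    rows = []
    for row in csv.DictReader(csv_file):
        pay = to_number(row["Total Pay"])
        if pay is not None:
            rows.append((row["Job Title"], pay))
    return sorted(rows, key=lambda pair: pair[1], reverse=True)[:n]

# Stand-in for an exported file; with a real export you would use
# open("export.csv", newline="", encoding="utf-8") instead of io.StringIO.
SAMPLE_CSV = """Job Title,Total Pay
Software Development Engineer,$185K/yr
Data Scientist,$170K/yr
Area Manager,$95K/yr
Warehouse Associate,N/A
"""

for title, pay in top_paid_roles(io.StringIO(SAMPLE_CSV), n=2):
    print(f"{title}: ${pay:,.0f}")
```

Rows whose pay can’t be parsed (such as "N/A") are skipped rather than treated as zero, so they don’t distort the ranking.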

By following these steps, you can use Octoparse to quickly extract job posting data from websites like Glassdoor, speeding up your analysis of the job market and helping you draw useful insights from the data you gather.

Conclusion

In the ever-evolving job market, access to comprehensive and up-to-date job posting data is a game-changer for HR departments and research teams. Web scraping empowers organizations to harness the power of data, providing insights into market trends, talent demand, and competitive intelligence.

With tools like Octoparse, scraping job posting data becomes efficient, consistent, and scalable. It frees up valuable human resources, reduces manual errors, and enables data-driven decision-making.

It’s time to embrace web scraping and unlock the potential of labor market analysis. With the ability to extract job posting data efficiently, you can gain a competitive edge, streamline recruitment processes, and make informed strategic decisions for your organization’s success. Start your web scraping journey today!