You might already know that pricing affects whether customers choose you or your competitor. What you might not know is how to systematically track what everyone else is charging.

Large retailers employ entire teams for this. Amazon makes over 2.5 million price changes per day—roughly one change every ten minutes on competitive items. Walmart’s pricing team monitors thousands of SKUs across dozens of competitors. They see price drops the same day they happen.

You don’t need a team to do something similar. You need a system. And that system starts with price scraping.

In this article, you’ll learn about three free price scraping tools and follow a step-by-step guide to scraping price data without coding.

What Is Price Scraping, and How Does It Work?

Price scraping is exactly what it sounds like: automatically collecting pricing information from websites. You tell a tool which pages to visit and which numbers represent prices. The tool visits those pages on a schedule and records what it finds.
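To make the “which numbers represent prices” step concrete, here is a minimal sketch that uses only Python’s standard library. It assumes the price sits in an element whose class list contains `price`, which is a hypothetical class name (every site uses its own markup). It also leaves out fetching pages and running on a schedule, the parts that the tools covered later handle for you.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone
from html.parser import HTMLParser


class PriceFinder(HTMLParser):
    """Collect the text of every element whose class list contains target_class."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.found: list[str] = []
        self._tag: str | None = None  # tag name of the matching element we're inside, if any
        self._depth = 0               # nesting depth of that same tag name
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if self._tag is not None:
            if tag == self._tag:
                self._depth += 1  # e.g. a <span> nested inside the price <span>
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._tag, self._depth, self._buffer = tag, 1, []

    def handle_endtag(self, tag):
        if self._tag is not None and tag == self._tag:
            self._depth -= 1
            if self._depth == 0:
                self.found.append("".join(self._buffer).strip())
                self._tag = None

    def handle_data(self, data):
        if self._tag is not None:
            self._buffer.append(data)


@dataclass
class PriceRecord:
    url: str
    raw_price: str   # kept as text; parse it into a number in a later step
    scraped_at: str  # UTC timestamp of this observation


def extract_price(url: str, html: str, target_class: str = "price") -> PriceRecord | None:
    """Return the first matching price on the page, or None if nothing matched."""
    finder = PriceFinder(target_class)
    finder.feed(html)
    finder.close()
    if not finder.found:
        return None
    return PriceRecord(url, finder.found[0], datetime.now(timezone.utc).isoformat())


page = '<div class="product"><h1>Widget</h1><span class="price sale">$19.99</span></div>'
print(extract_price("https://example.com/widget", page).raw_price)  # $19.99
```

A scheduled job would call something like `extract_price` for each URL and append the records to a file or database. That accumulated history is what makes the data useful.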

Global e-commerce sales reached $6.9 trillion in 2024, with over five billion internet users browsing, comparing, and purchasing online. That’s a lot of price points changing constantly—and a lot of competitive intelligence you’re missing if you’re not tracking it.

The concept is simple. The reason you’re searching for it—instead of just doing it—is that execution gets tricky fast.

E-commerce sites don’t hand you their pricing data in a spreadsheet. Prices are buried inside web pages, surrounded by images, reviews, and promotional banners. Extracting just the price, reliably, across hundreds of products, without getting blocked, requires either real technical skill or the right tools.

Most businesses end up in one of three places: they overpay for commercial data feeds, they assign a junior employee to manually check competitor sites (soul-crushing work that never gets done consistently), or they simply guess what competitors are charging.

None of those options are necessary anymore.

Price scraping offers real advantages in market intelligence and competitiveness. Even so, make sure your scraping complies with applicable laws and with the terms of service of the sites you scrape. That’s the simplest way to avoid legal problems over how you collect and use the data.

Why Can’t I Just Use an Official API?

This is usually the first question, and it’s the right one to ask.

When people first explore price monitoring, they Google something like “Amazon API pricing data” hoping for an easy answer. And technically, Amazon does have an API—the Product Advertising API.

But here’s the catch: that API is designed for affiliate marketers, not competitive intelligence. It doesn’t expose real-time pricing for most products. It has strict rate limits. It requires you to drive qualifying sales to maintain access. And it only covers Amazon.

This pattern repeats everywhere. Walmart, Target, Best Buy—most major retailers either don’t offer public APIs or lock them behind partnership agreements. The prices you can see as a shopper aren’t available through any official channel.

This gap between “I can see it on the website” and “I can access it programmatically” is exactly why price scraping exists.

What Makes Price Scraping Difficult?

Two things stop most people before they start.

1. The technical barrier is real.

Writing a price scraper from scratch means understanding HTML, HTTP requests, browser automation, and error handling.

You can write a Python script that works beautifully on Monday and breaks on Thursday when the website tweaks its layout.

For someone without a programming background, the learning curve feels endless. For someone with a programming background, the maintenance feels pointless.

2. Websites actively resist scraping.

E-commerce sites don’t want bots visiting their pages. They implement CAPTCHAs, rate limits, IP blocking, and fingerprint detection specifically to stop you. A simple script that sends requests too quickly gets blocked within minutes. Getting around these defenses requires rotating proxies, browser emulation, and careful throttling—layers of complexity stacked on top of what was already a complex task.
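Throttling is the easiest of those layers to show in code. The sketch below is a hypothetical helper, not any tool’s API. It waits a randomized few seconds between requests and backs off after network errors. The delay values are assumptions you would tune per site, and pacing alone will not get you past CAPTCHAs or fingerprint detection.

```python
from __future__ import annotations

import random
import time
import urllib.request
from typing import Callable, Iterable


def fetch_html(url: str) -> str:
    """Download one page with the standard library (the User-Agent string is a placeholder)."""
    req = urllib.request.Request(url, headers={"User-Agent": "price-monitor/0.1"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.read().decode("utf-8", errors="replace")


def polite_crawl(
    urls: Iterable[str],
    fetch: Callable[[str], str] = fetch_html,
    min_delay: float = 5.0,
    jitter: float = 3.0,
    max_retries: int = 2,
    sleep: Callable[[float], None] = time.sleep,
) -> dict[str, str | None]:
    """Fetch URLs one at a time, pausing between requests and backing off on errors."""
    results: dict[str, str | None] = {}
    for i, url in enumerate(urls):
        if i > 0:
            # Randomized pause so requests don't arrive at a fixed, bot-like rhythm.
            sleep(min_delay + random.uniform(0, jitter))
        for attempt in range(max_retries + 1):
            try:
                results[url] = fetch(url)
                break
            except OSError:  # urllib's URLError and HTTPError are OSError subclasses
                if attempt == max_retries:
                    results[url] = None  # give up on this URL and record the failure
                else:
                    sleep(min_delay * 2 ** (attempt + 1))  # exponential back-off: 10s, then 20s
    return results
```

The `fetch` and `sleep` parameters can be swapped out, so you can test the pacing logic without touching a live site. A `None` result marks a URL to retry on the next scheduled run.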

I’m not telling you this to discourage you. I’m telling you because understanding the obstacles helps you choose the right approach. If you try to build this from scratch without knowing what you’re getting into, you’ll waste weeks and end up frustrated.

The good news: tools exist that handle both barriers for you.

To make web scraping accessible to most people, the tools listed below require no coding. They can export price data, along with other commercial data, into structured files quickly and easily.

What Can You Actually Do With Price Data?

Collecting data feels productive, but data sitting in a spreadsheet doesn’t help anyone. The value comes from what you do with it.

The ROI is measurable. According to research from QL2, one office supply retailer calculated a 260% return on their competitive intelligence investment—$2.60 back for every dollar spent on price tracking. McKinsey research suggests companies using data-driven pricing strategies see 10-15% increases in margins and 5-10% boosts in sales.

But you don’t need complex analytics to capture value. Here’s what basic price data enables:

Understand where you actually stand. Are you the cheapest option on your bestsellers? The most expensive? Somewhere in the middle? Without data, you’re guessing based on the last time someone manually checked. With daily data, you know exactly where you’re positioned on every product.
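Once you have a day’s prices, answering “where do I stand?” takes only a short calculation. This sketch uses made-up prices and competitor names. Ties count in your favor, because a competitor charging the same price isn’t cheaper than you.

```python
def price_position(my_price: float, competitor_prices: dict[str, float]) -> dict:
    """Rank my price against competitors for one product (rank 1 = nobody is cheaper)."""
    cheaper = sum(1 for p in competitor_prices.values() if p < my_price)
    lowest = min(competitor_prices.values())
    return {
        "rank": cheaper + 1,
        "out_of": len(competitor_prices) + 1,
        # Positive: I'm above the cheapest competitor; negative: I'm below it.
        "gap_to_lowest_pct": round((my_price - lowest) / lowest * 100, 1),
    }


position = price_position(24.99, {"shop_a": 22.49, "shop_b": 26.00, "shop_c": 24.99})
print(position)  # {'rank': 2, 'out_of': 4, 'gap_to_lowest_pct': 11.1}
```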

Catch competitor promotions early. Your competitors don’t send you press releases when they drop prices. But if you’re tracking their pages daily, you’ll see a 20% price cut the same day it happens. That gives you time to decide: match it, ignore it, or run a counter-promotion.
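To catch a cut like that, you compare today’s snapshot with yesterday’s. Here is a minimal sketch. It assumes both snapshots are dictionaries keyed by a product ID of your choosing, and the 10% alert threshold is an arbitrary example.

```python
def detect_price_drops(
    yesterday: dict[str, float], today: dict[str, float], threshold_pct: float = 10.0
) -> list[tuple[str, float, float, float]]:
    """Return (product, old, new, change %) for every drop of at least threshold_pct."""
    alerts = []
    for product, new in today.items():
        old = yesterday.get(product)
        if old is None or old <= 0:
            continue  # new listing or bad historical value: nothing to compare against
        change_pct = (new - old) / old * 100
        if change_pct <= -threshold_pct:
            alerts.append((product, old, new, round(change_pct, 1)))
    return sorted(alerts, key=lambda alert: alert[3])  # biggest drop first


drops = detect_price_drops(
    {"blender": 50.0, "toaster": 80.0, "kettle": 30.0},
    {"blender": 40.0, "toaster": 79.0, "mixer": 10.0},
)
print(drops)  # [('blender', 50.0, 40.0, -20.0)]
```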

Spot trends before they hurt you. A single price change is noise. A competitor systematically lowering prices across a category over three weeks is a strategic signal. You can only see that pattern if you have historical data.
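Here is one simple way to turn that history into a signal. The sketch rests on a few assumptions: each product’s prices are stored oldest first for the window you care about, the product names are hypothetical, and a product counts as “down” if it fell at least 2% (an arbitrary cutoff). If most of a category is down, that is the systematic move worth a closer look.

```python
from statistics import median


def category_trend(history: dict[str, list[float]], min_drop_pct: float = 2.0) -> dict:
    """Summarize how one competitor's prices moved across a category over a window."""
    changes = [
        (prices[-1] - prices[0]) / prices[0] * 100  # first-to-last change, in percent
        for prices in history.values()
        if len(prices) >= 2 and prices[0] > 0
    ]
    if not changes:
        return {"products": 0, "share_down": 0.0, "median_change_pct": 0.0}
    down = sum(1 for change in changes if change <= -min_drop_pct)
    return {
        "products": len(changes),
        "share_down": round(down / len(changes), 2),
        "median_change_pct": round(median(changes), 1),
    }


trend = category_trend({
    "A": [100, 95, 90], "B": [50, 49, 47], "C": [20, 20, 20],
    "D": [80, 81, 79], "E": [40, 38, 36],
})
print(trend)  # {'products': 5, 'share_down': 0.6, 'median_change_pct': -6.0}
```

The median is used instead of the mean so that one heavily discounted clearance item doesn’t look like a category-wide move.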

Negotiate better with suppliers. When you’re asking for better margins, showing vendors what competitors charge at retail strengthens your case. “The market price is X” is more persuasive when X comes with a chart.

Feed smarter pricing systems. If you’ve graduated to dynamic pricing—or you’re considering it—competitor data is essential input. You can’t price intelligently in a vacuum.

Notice that none of this requires fancy analytics. A spreadsheet with thirty days of competitor prices, refreshed automatically, supports all of it.

Top 3 Price Scrapers You Cannot Miss


1. Octoparse

Octoparse is your best choice for price scraping. You can use it to scrape price data from most e-commerce websites, such as Amazon, eBay, AliExpress, Etsy, and Priceline, and to collect other information from these platforms, including product descriptions, ratings, reviews, and comments. You don’t need to know how to code: Octoparse’s auto-detection function and preset templates set up the extraction for you.

Beyond that, Octoparse provides advanced functions such as IP proxies, CAPTCHA bypassing, cloud scraping, and scheduled scraping to help you collect hard-to-reach or large-scale data.

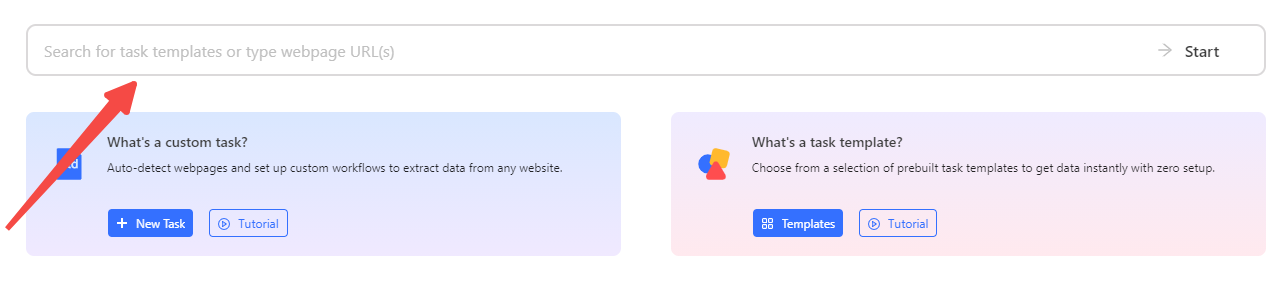

Method 1: Price scraping templates for popular sites

Octoparse provides a set of data scraping templates for popular e-commerce websites, including Amazon, eBay, Etsy, Flipkart, and others. With these templates, you only need to enter a few parameters to start scraping price data. Try the following online price scraper to get Amazon price data:

https://www.octoparse.com/template/amazon-product-details-scraper

Method 2: Scrape price data with Octoparse manually

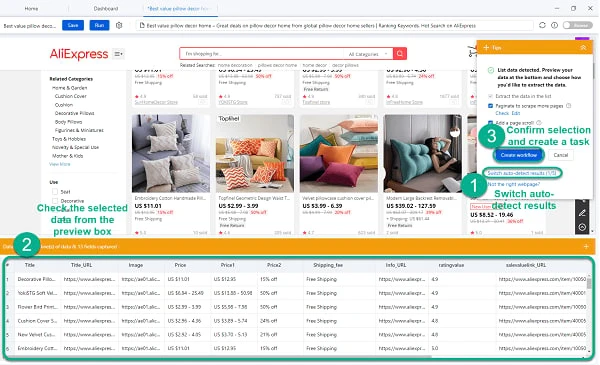

If the preset templates cannot meet your scraping needs, then you can customize your price monitoring by creating a crawler manually with Octoparse. Download Octoparse and follow the simple steps below to start.

Step 1: Copy and paste the target price page link.

Go to the web page you want to scrape, copy its URL, and paste it into the URL bar on the Octoparse homepage. Then click the Start button.

Step 2: Set the price data fields.

Auto-detection starts and recognizes the price areas automatically. When it finishes, create a workflow. Preview the data fields and adjust them as needed: edit their XPath, set up pagination, add or delete data fields, and so on. The Tips panel will prompt you through the next steps.
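If XPath is new to you, this sketch shows roughly what a price selector expresses. It runs Python’s built-in ElementTree on a made-up product snippet. ElementTree supports only a subset of XPath and needs well-formed markup, so treat this as an illustration. In a tool that accepts full XPath, the equivalent expression would be `//span[@class='price']`. Note that `[@class='price']` is an exact match, so it doesn’t accidentally pick up the `price-was` element that holds the old price.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified product markup. Real pages are messier and rarely well-formed XML.
snippet = """
<div class="product">
  <h1 class="title">Espresso Machine</h1>
  <div class="buybox">
    <span class="price">$249.00</span>
    <span class="price-was">$299.00</span>
  </div>
</div>
"""

root = ET.fromstring(snippet.strip())
# ".//" searches all descendants; [@class='...'] keeps elements whose class attribute matches exactly.
price = root.find(".//span[@class='price']").text
was_price = root.find(".//span[@class='price-was']").text
print(price, was_price)  # $249.00 $299.00
```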

Step 3: Start scraping price data.

After you’ve checked all your scraping settings, click the Run button to start extracting data from the website. You can run the task locally on your own computer or in the cloud, and you can schedule runs for the times you want.

Step 4: Download price data into Excel.

You can export the scraped data in the format you need, such as Excel, CSV, or Google Sheets, or send it directly to a database.


2. Import.io

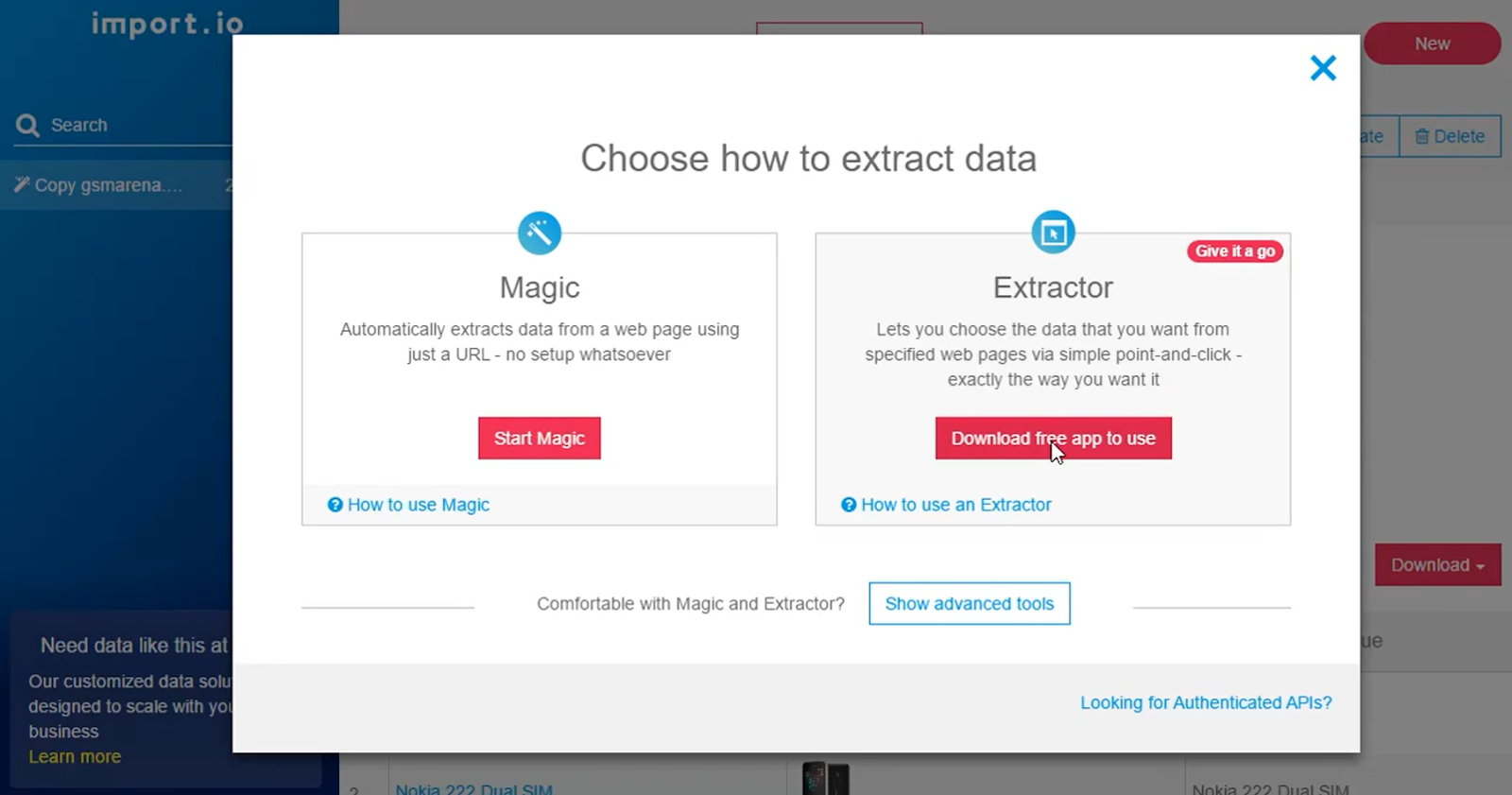

Import.io has been around longer than most visual scraping tools, and it shows—both in good ways and bad.

The “Magic” import feature is impressive when it works. You paste a URL, and it attempts to convert the page into a structured table automatically. For simple pages with clear tabular data, this can be faster than Octoparse’s workflow. I’ve had good results on simpler e-commerce sites and product listing pages.

The integration options are solid. Google Sheets, Excel, and direct API access all work as advertised. If you’re building a workflow that needs to pull data programmatically, Import.io’s API is well-documented.

What frustrated me: the desktop app.

For anything beyond basic pages, Import.io pushes you toward downloading their application, and the experience there feels dated compared to browser-based tools. The learning curve is steeper than it needs to be, and I found myself checking their tutorials more often than I’d like.

Pricing is also worth mentioning. They advertise a free tier, but the limits are tight enough that any serious price monitoring project will hit them quickly. The jump to paid plans is significant.

I’d consider Import.io if you’re already in their ecosystem or if their Magic tool happens to work well on your specific target sites. For starting fresh, I think there are easier paths.

3. ScrapeBox

ScrapeBox is a different animal entirely. It’s a desktop application that’s been a staple in the SEO community for over a decade, and it can technically be used for price scraping—but that’s not really what it’s built for.

The strength of ScrapeBox is bulk operations: harvesting URLs, checking page ranks, verifying proxies, scraping search results at scale. SEO professionals and marketers use it for competitive research, link prospecting, and (historically) some gray-hat tactics I won’t get into.

For price monitoring specifically, you can make it work, but you’ll be fighting the tool. There’s no visual point-and-click for defining price fields. You’re working with patterns and configurations that assume you know what you’re doing. The proxy management is powerful—thousands of rotating IPs—but setting it up requires real effort.

I wouldn’t recommend ScrapeBox as your first price scraping tool. But if you’re already using it for SEO work and want to add price monitoring without adopting another platform, it’s capable enough. Just expect to spend time configuring rather than extracting.

For a deeper comparison of price monitoring options, see: Best Price Monitoring Tools

How Do I Get Started With Price Scraping?

Let me walk you through what the first week actually looks like.

Start by defining what you’re tracking. Not every product deserves monitoring. Focus on your bestsellers, your highest-margin items, and products where you’ve lost sales and suspect pricing was the reason. Fifty to a hundred products is plenty to start. Resist the urge to track everything—you can always expand later.

Gather the URLs. For Amazon, this means product detail pages (the URLs with “/dp/” and an ASIN). For other retailers, it’s whatever page shows the current price. You can collect these manually or export them from your inventory system if you’re monitoring products you also sell.
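Amazon URLs collected from search results or shared links often carry tracking parameters, and the same product can show up more than once. This cleanup sketch makes a few assumptions: the ASIN is the 10-character uppercase alphanumeric segment right after “/dp/”, you’re targeting amazon.com (swap the domain for other marketplaces), and the example ASINs are made up.

```python
import re

# The ASIN: 10 uppercase letters/digits right after /dp/, followed by /, ?, #, or the end of the URL.
ASIN_PATTERN = re.compile(r"/dp/([A-Z0-9]{10})(?=[/?#]|$)")


def canonical_amazon_urls(urls: list[str], domain: str = "www.amazon.com") -> list[str]:
    """Reduce product links to one clean /dp/ URL per ASIN, keeping first-seen order."""
    seen: set[str] = set()
    cleaned = []
    for url in urls:
        match = ASIN_PATTERN.search(url)
        if not match:
            continue  # not a product detail page (e.g. a search results URL)
        asin = match.group(1)
        if asin not in seen:
            seen.add(asin)
            cleaned.append(f"https://{domain}/dp/{asin}")
    return cleaned


links = [
    "https://www.amazon.com/Example-Product-Name/dp/B0EXAMPLE1/ref=sr_1_1?keywords=espresso",
    "https://www.amazon.com/dp/B0EXAMPLE1",
    "https://www.amazon.com/s?k=espresso",
]
print(canonical_amazon_urls(links))  # ['https://www.amazon.com/dp/B0EXAMPLE1']
```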

Pick your tool and build your first scraper. I’ll give my recommendation in the next section, but the process is similar across platforms: you paste a URL, let the tool scan the page, verify that it identified the right price element, and apply that pattern to your full list.

Run it, review the output, and fix what broke. Your first extraction will probably have issues. Some pages might fail. Some prices might pull incorrectly. That’s normal. Adjust the configuration based on what you see.
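A quick way to find what broke is to parse every scraped price string and list the rows that don’t yield a number. This sketch assumes US-style formatting (“$1,299.99”) and takes the first number it finds. That shortcut is why a row like “Now $19.50 (was $25)” deserves a manual check. European formats such as “1.299,99 €” would need a different rule.

```python
import re
from decimal import Decimal

# First number in the string, allowing thousands commas and an optional decimal part.
PRICE_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")


def parse_price(raw):
    """Return the first price-like number in raw as a Decimal, or None if there isn't one."""
    if not raw:
        return None
    match = PRICE_RE.search(raw)
    return Decimal(match.group(0).replace(",", "")) if match else None


def review_run(rows):
    """Split (url, raw_price) rows into parsed prices and failures to inspect by hand."""
    parsed, failed = {}, []
    for url, raw in rows:
        value = parse_price(raw)
        if value is None:
            failed.append((url, raw))
        else:
            parsed[url] = value
    return parsed, failed


parsed, failed = review_run([
    ("page-1", "$1,299.99"),
    ("page-2", "Currently unavailable."),
    ("page-3", None),
    ("page-4", "Now $19.50 (was $25)"),
])
print(parsed)  # {'page-1': Decimal('1299.99'), 'page-4': Decimal('19.50')}
print(failed)  # [('page-2', 'Currently unavailable.'), ('page-3', None)]
```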

Set a schedule and connect the output. Once extraction works reliably, automate it. Daily is the most common frequency. Connect the results to wherever you’ll actually use them—a Google Sheet, a dashboard, or your pricing system directly.
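If a plain file is your first destination, one common layout is a “long” CSV with one row per product, competitor, and day, appended after each run. It opens in Excel, imports into Google Sheets, and builds the historical record that trend-spotting depends on. Here is a sketch with hypothetical column names and sample values:

```python
import csv
import tempfile
from datetime import date
from pathlib import Path

FIELDS = ["date", "product_id", "competitor", "price", "currency"]


def append_snapshot(path, rows, run_date=None):
    """Append one run's prices to a long-format CSV, writing the header only once."""
    path = Path(path)
    stamp = (run_date or date.today()).isoformat()
    write_header = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if write_header:
            writer.writeheader()
        for row in rows:
            writer.writerow({"date": stamp, **row})
    return len(rows)


# Demo in a throwaway directory: two daily runs for one product.
with tempfile.TemporaryDirectory() as tmp:
    history = Path(tmp) / "price_history.csv"
    append_snapshot(history, [{"product_id": "SKU-1", "competitor": "shop_a",
                               "price": "19.99", "currency": "USD"}], date(2024, 5, 1))
    append_snapshot(history, [{"product_id": "SKU-1", "competitor": "shop_a",
                               "price": "18.99", "currency": "USD"}], date(2024, 5, 2))
    with history.open(newline="", encoding="utf-8") as f:
        saved = list(csv.DictReader(f))

print([(row["date"], row["price"]) for row in saved])
# [('2024-05-01', '19.99'), ('2024-05-02', '18.99')]
```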

By the end of week one, you’ll have a working system. It won’t be perfect. You’ll refine it over time. But you’ll be making pricing decisions with actual data instead of assumptions.

What’s the Best Tool for Price Scraping?

I’ll be direct: Octoparse is what I’d recommend for most businesses getting started with price scraping.

The visual interface actually works. You click on price elements, and it figures out the extraction pattern. Auto-detection handles most standard e-commerce layouts without manual configuration. Cloud scraping means nothing runs on your machine. Scheduled scraping handles the “do this every day without me thinking about it” requirement.

The template library is worth calling out specifically. For Amazon, eBay, Etsy, and other major platforms, Octoparse has pre-built scrapers (templates) ready to go.

You enter your search terms or product IDs, and it handles everything else. For common use cases, this cuts setup from hours to minutes.

For more advanced scenarios—variant pricing, geographic differences, or integrating scraped data directly into your systems—Octoparse offers API access to trigger runs and pull results programmatically. That matters once you’re past the experimental stage and price monitoring becomes part of your daily operations.

Where Octoparse fits less well: if you need data within seconds of requesting it (true real-time), Octoparse isn’t built for that. It’s designed for scheduled extraction, not on-demand API calls. It’s also a weaker fit for sites with extremely aggressive bot detection that require highly customized scraping solutions.

For most price monitoring use cases, though, it’s the right starting point.

For related guides on specific platforms and use cases, check out:

- How to scrape Amazon pricing data

- Google Shopping price scraping

- Price scraping for e-commerce startups

- Tracking property prices with web scraping

Final Thoughts

Price scraping is an essential strategy for businesses looking to stay competitive in today’s market. With the right tools, you can efficiently track and monitor price data from competitors, analyze market trends, and optimize your own pricing strategies.

The free price scraping tools discussed in this article, including Octoparse, provide great starting points for extracting valuable pricing information. Whether you’re new to web scraping or an experienced user, these tools can help you automate the data collection process and save valuable time.

Start using these solutions today to make more informed pricing decisions and enhance your business strategy.

FAQs

1. Is price scraping legal?

The short answer: scraping publicly visible pricing data for competitive intelligence is generally low-risk, but not zero-risk.

That said, terms of service matter. Most e-commerce sites prohibit scraping in their ToS, and violating those terms could theoretically expose you to civil liability—though enforcement against businesses doing reasonable competitive research is rare.

The principle to follow: scrape like a customer would browse. Don’t hammer servers with thousands of requests per second. Don’t collect personal data. Don’t bypass login walls. Stay within what a motivated human could do manually, just automated.

For a more detailed walkthrough of the legal landscape, see “Is web scraping legal?”

2. How do I handle prices in different currencies?

This trips people up more than the scraping itself.

You’ll scrape a price from a UK site and get “£299.” From Germany, “€349.” From Japan, “¥44,800.” Now you need to compare them, and suddenly you’re dealing with currency conversion, exchange rate fluctuation, and the question of when to convert.

The cleanest approach I’ve found: store the raw price and currency code exactly as scraped, then convert to your base currency at analysis time using that day’s exchange rate. This preserves the original data and lets you re-run comparisons with updated rates later.

For the conversion itself, free APIs like exchangerate-api.com or Open Exchange Rates handle the lookup. If you’re working in Google Sheets, the GOOGLEFINANCE function pulls live rates directly. In Excel, you’ll need a plugin or a manual rate table.
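Here is the “store raw, convert later” pattern as a sketch. The rate table is hypothetical: the numbers are placeholders, not real market rates, and in practice you would fill the table per date from whichever exchange-rate source you use. Amounts use `Decimal` so the stored values stay exactly as scraped.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal, ROUND_HALF_UP


@dataclass(frozen=True)
class ScrapedPrice:
    amount: Decimal   # exactly as scraped; never overwritten by a conversion
    currency: str     # ISO 4217 code recorded at scrape time, e.g. "GBP"
    scraped_on: date


# Placeholder rates: units of the base currency (USD) per one unit of each currency, by date.
RATES_TO_USD = {
    (date(2024, 5, 1), "USD"): Decimal("1"),
    (date(2024, 5, 1), "GBP"): Decimal("1.25"),
    (date(2024, 5, 1), "EUR"): Decimal("1.07"),
}


def to_base(price: ScrapedPrice, rates: dict = RATES_TO_USD) -> Decimal:
    """Convert at analysis time using the rate for the day the price was scraped."""
    rate = rates[(price.scraped_on, price.currency)]  # KeyError means a missing rate: fail loudly
    return (price.amount * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


uk = ScrapedPrice(Decimal("299"), "GBP", date(2024, 5, 1))
de = ScrapedPrice(Decimal("349"), "EUR", date(2024, 5, 1))
print(to_base(uk), to_base(de))  # 373.75 373.43
```

The currency code is stored as a separate field because symbols alone are ambiguous: “$” and “¥” are each used by more than one currency.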

One trap to avoid: don’t assume the price you see is the price a local customer pays. VAT inclusion varies by country, and display currencies don’t always match checkout currencies. For example, a “€349” price that includes Germany’s 19% VAT comes to about €352 for a French customer once France’s 20% VAT is applied instead. If precision matters, you may need to scrape deeper into the checkout flow, or accept that your cross-border comparisons are approximate.
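The VAT part of that adjustment is simple arithmetic: divide out one country’s rate, then apply the other’s. This sketch uses the standard rates of 19% (Germany) and 20% (France) as illustrative inputs. Check current rates before relying on them, and keep in mind that reduced rates apply to some product categories and that retailers may set country prices independently of VAT.

```python
from decimal import Decimal, ROUND_HALF_UP

# Illustrative standard VAT rates; verify current and product-specific rates before use.
STANDARD_VAT = {"DE": Decimal("0.19"), "FR": Decimal("0.20")}


def regross(gross_price: Decimal, from_country: str, to_country: str,
            vat: dict = STANDARD_VAT) -> Decimal:
    """Strip one country's VAT from a VAT-inclusive price, then apply another country's."""
    net = gross_price / (1 + vat[from_country])
    return (net * (1 + vat[to_country])).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


print(regross(Decimal("349"), "DE", "FR"))  # 351.93
```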

For most competitive intelligence, same-currency comparisons within a single market are more actionable than trying to normalize global pricing perfectly.

3. Can I scrape prices from websites that require login?

Technically, yes. Practically, it gets complicated.

Most visual scraping tools, including Octoparse, support authenticated scraping. You provide your login credentials, the tool signs in, and then scrapes pages that are only visible to logged-in users. This works for wholesale portals, distributor sites, and membership-based pricing that you have legitimate access to.

The legal picture changes, though. Scraping public pages is well-established territory. Scraping behind a login means you’ve agreed to terms of service to get that access—and those terms almost certainly prohibit automated data collection. You’re no longer in “publicly visible data” territory. You’re using credentials to access a restricted system, which raises different legal questions.

My approach: if it’s a site where I have a genuine business relationship—a supplier portal, a wholesale account—I’ll scrape it for my own operational needs. I wouldn’t scrape a competitor’s dealer portal using credentials I shouldn’t have. The line is whether you’re automating access you legitimately possess versus circumventing access controls.

If B2B pricing intelligence is critical to your business and you’re unsure where the line is, that’s a conversation for a lawyer, not a blog post.

4. What do I do when my scraper breaks?

It will break. Every scraper breaks eventually. The question is how quickly you notice and how painful the fix is.

Scrapers break for a few predictable reasons:

- The website redesigns and your selectors no longer match anything.

- The site adds or changes anti-bot measures.

- A product goes out of stock and the page structure changes.

- The site starts serving different content to detected bots.

Each failure mode looks different in your data—empty fields, error messages captured as “prices,” or jobs that simply return nothing.

The first defense is monitoring. Don’t just schedule scraping and forget it. Set up alerts for when extraction returns zero results, when data volume drops significantly, or when values fall outside expected ranges. A price of “$0.00” or “$999,999” should trigger a flag, not silently enter your database.
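These checks fit in a few lines. The sketch assumes each run produces a mapping from product to parsed price, with None wherever extraction failed. The price bounds and the 50% volume-drop tolerance are placeholders that you would set per category.

```python
from __future__ import annotations

from decimal import Decimal


def check_run(
    prices: dict[str, Decimal | None],
    previous_count: int | None = None,
    low: Decimal = Decimal("0.01"),
    high: Decimal = Decimal("10000"),
    max_drop_share: float = 0.5,
) -> list[str]:
    """Return human-readable alerts for one scraping run; an empty list means it looks healthy."""
    found = {key: value for key, value in prices.items() if value is not None}
    if not found:
        return ["Run returned zero prices"]
    alerts = []
    # Flag runs that returned far fewer prices than the previous one.
    if previous_count and len(found) < previous_count * (1 - max_drop_share):
        alerts.append(f"Volume dropped: {len(found)} prices vs {previous_count} last run")
    # Flag implausible values such as $0.00 or $999,999 instead of storing them silently.
    for key, value in found.items():
        if not low <= value <= high:
            alerts.append(f"Out-of-range price for {key}: {value}")
    return alerts


alerts = check_run({"sku-a": Decimal("0.00"), "sku-b": Decimal("24.99"),
                    "sku-c": None, "sku-d": Decimal("999999")}, previous_count=10)
print(alerts)
```

You could send whatever this returns to email or chat after each scheduled run. An empty list means nothing needs attention.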

When something breaks, visual tools pay off. In Octoparse, I can check the log of my scraping task, see exactly which element is failing, and adjust the selector by pointing at the new location. That’s a fifteen-minute fix.

In a custom Python script, I’m digging through HTML changes, updating regex patterns, and hoping I didn’t break something else. That’s an afternoon.

The deeper fix is accepting that scraping is an ongoing relationship, not a one-time setup. Budget an hour a week for maintenance.

- Check that jobs are running successfully.

- Spot-check data quality.

- Update selectors before small drifts become complete failures.

Treat your scrapers like you’d treat any other piece of operational infrastructure—because that’s what they are.