In recent years, everyone has been talking about chatbots, and large language models (LLMs) and AI have become trending concepts. As a result, web scraping has garnered a lot of attention, since AI relies on vast amounts of scraped data. Website owners, on the other hand, are far less welcoming of web scraping and deploy many defenses against scrapers. To avoid being blocked during data extraction, more and more web scraping solutions are equipped with IP proxies to improve scraping efficiency.

Why Do Websites Block Scrapers

To pull data from a website, a scraper sends requests to the server to retrieve the HTML content of a page, then analyzes the HTML structure to extract the desired data. If a scraper sends a large volume of requests in a short time (for example, more than 50 requests per minute), it can overwhelm the server and, in the worst case, even crash the site. This is one reason why 83% of e-commerce websites use various means to prevent scraping.
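The parsing half of that cycle can be sketched with Python's standard library. This minimal example skips the network request and parses an HTML string directly. The `product-title` class name and the sample markup are hypothetical and were chosen only for illustration. Real pages need selectors that match their own structure.

```python
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collects the text of every <h2 class="product-title"> element (hypothetical markup)."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h2" and "product-title" in classes:
            self.in_title = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self.in_title = False

    def handle_data(self, data):
        # Only keep text that sits inside a matching <h2>
        if self.in_title:
            self.titles[-1] += data.strip()


def extract_titles(html: str) -> list[str]:
    parser = TitleExtractor()
    parser.feed(html)
    parser.close()
    return parser.titles


sample = """
<div><h2 class="product-title">Desk Lamp</h2><p>$19.99</p></div>
<div><h2 class="product-title">Office Chair</h2><p>$89.00</p></div>
"""
print(extract_titles(sample))  # ['Desk Lamp', 'Office Chair']
```

In a real scraper, the `sample` string would be the HTML body returned by the server.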

The most common way to block web scraping is to limit the access rate of each IP address. When a scraper makes too many requests in a short period from a single IP address, the website can easily identify that IP and will block its requests sooner or later. To avoid this, we should not scrape a website from a single IP address, which is why proxy servers play an essential role in avoiding blocks.
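To see why a single IP gets caught, here is a minimal sketch of how a server might enforce a per-IP limit with a sliding one-minute window. The 50-requests-per-minute threshold echoes the figure mentioned earlier. Real sites choose their own limits and rarely publish them. The `SlidingWindowLimiter` class is hypothetical and does not represent any particular site's implementation.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allows at most `limit` requests per IP within any `window`-second span."""

    def __init__(self, limit: int = 50, window: float = 60.0):
        self.limit = limit
        self.window = window
        self.history = defaultdict(deque)  # ip -> timestamps of accepted requests

    def allow(self, ip: str, now: float) -> bool:
        stamps = self.history[ip]
        # Drop timestamps that have slid out of the window
        while stamps and now - stamps[0] >= self.window:
            stamps.popleft()
        if len(stamps) >= self.limit:
            return False  # over the limit: reject (or throttle) this request
        stamps.append(now)
        return True


limiter = SlidingWindowLimiter(limit=50, window=60.0)
# One IP sending a request every 0.5 s (120 requests/minute) for 30 s
results = [limiter.allow("203.0.113.7", t * 0.5) for t in range(60)]
print(results.count(True))  # 50: requests 51-60 are rejected
```

The counter is kept per IP, so a request from a different address, such as `limiter.allow("198.51.100.2", 29.5)`, is still accepted. That is exactly the gap proxies exploit.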

What Is a Web Proxy and How Does It Work

A web proxy is a server that makes internet requests on your behalf. Instead of seeing your real IP address, websites see the proxy server's IP address. When you send a request through a proxy, the proxy forwards it to the target website, receives the response, and sends it back to you. This hides your IP address, and with it your approximate location, from the websites you visit. Proxies can also manage and filter requests, improve security, and cache data to speed up access.

When a computer connects to the Internet, it uses an IP address. An IP address is similar to your home's street address: it tells incoming data where to go and marks outgoing data with a return address so other devices know where to reply. A proxy server is essentially a computer on the Internet with an IP address of its own. When you make requests to web pages through a proxy server, every request goes to the proxy first, which evaluates it and forwards it to the Internet. Likewise, responses come back to the proxy server and then to you. Depending on your use case, needs, or company policy, proxy servers can provide varying levels of functionality, security, and privacy.
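On the client side, routing requests through a proxy is mostly configuration. Here is a minimal sketch using Python's standard `urllib.request` module. The proxy address `http://198.51.100.10:8080` is a placeholder from a documentation IP range, so substitute a proxy you actually control or rent.

```python
import urllib.request

PROXY_URL = "http://198.51.100.10:8080"  # placeholder proxy address (hypothetical)


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends both HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    # Passing our own ProxyHandler replaces the default one (which reads env vars)
    return urllib.request.build_opener(handler)


def fetch(url: str, proxy_url: str = PROXY_URL, timeout: float = 10.0) -> bytes:
    """Fetch url via the proxy; the target site typically sees the proxy's IP, not yours."""
    opener = build_proxied_opener(proxy_url)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

With a reachable proxy at `PROXY_URL`, calling `fetch("https://example.com")` sends the request to the proxy first, which forwards it and relays the response back.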

Why IP Proxies Are Important for Web Scraping

As mentioned above, website owners usually block IP addresses that send too many requests within a short window, such as 5-10 minutes. If you scrape data from your own IP, that address is likely to be blocked and your data collection will fail. IP proxies can solve this problem to a large extent.

Avoiding IP Blocking

In our testing, scrapers get blocked within 30 seconds on sites like LinkedIn and Amazon when making more than 10 requests per minute from a single IP.

This is because these websites monitor incoming requests and may block IP addresses that show suspicious, bot-like behaviors, such as:

- no JavaScript rendering

- linear crawling

- identical headers

- sending more than 50 requests per minute to the server over 5-10 minutes, as mentioned above.
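Here is a rough sketch of how a site might check one IP's request log against some of these signals. The `Request` record, the page-number heuristic for linear crawling, and the thresholds are all hypothetical simplifications. JavaScript rendering is left out because detecting it usually requires client-side challenges rather than log inspection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    timestamp: float  # seconds since the first request
    page: int         # page number in the URL, e.g. /products?page=N (hypothetical)
    headers: tuple    # (name, value) pairs exactly as sent


def suspicious_signals(log: list[Request], max_per_minute: int = 50) -> list[str]:
    """Return the bot-like signals found in one IP's request log (sorted by time)."""
    signals = []
    if len(log) < 2:
        return signals

    # Rate: does any 60-second window starting at a request exceed the threshold?
    for start in log:
        in_window = sum(1 for r in log
                        if start.timestamp <= r.timestamp < start.timestamp + 60)
        if in_window > max_per_minute:
            signals.append("high request rate")
            break

    # Identical headers: every request carries exactly the same header set
    if len({r.headers for r in log}) == 1:
        signals.append("identical headers")

    # Linear crawling: pages visited strictly in order 1, 2, 3, ...
    if all(b.page - a.page == 1 for a, b in zip(log, log[1:])):
        signals.append("linear crawling")

    return signals
```

A scraper that requests pages 1 to 60 every half second with the same headers trips all three checks.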

This is where proxies come in. IP proxies allow scrapers to distribute requests across multiple addresses, and rotating IP addresses helps evade detection and minimizes the risk of being blocked. For example, when one IP address reaches a request threshold and gets blocked, other proxies can continue making requests so the scraper keeps working.
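The rotation-with-fallback idea can be sketched as a round-robin over a proxy pool that retires any proxy the site blocks. This is a minimal sketch. The `fetch(url, proxy)` callable is hypothetical, and its use of `PermissionError` to signal a block (for example, an HTTP 403 or 429 response) is an assumption made to keep the example self-contained.

```python
from itertools import cycle


class ProxyRotator:
    """Round-robin over a proxy pool, skipping proxies marked as blocked."""

    def __init__(self, proxies: list[str]):
        self.proxies = list(proxies)
        self.blocked: set[str] = set()
        self._cycle = cycle(self.proxies)

    def next_proxy(self) -> str:
        # At most one full pass over the pool to find a usable proxy
        for _ in range(len(self.proxies)):
            proxy = next(self._cycle)
            if proxy not in self.blocked:
                return proxy
        raise RuntimeError("every proxy in the pool is blocked")

    def mark_blocked(self, proxy: str) -> None:
        self.blocked.add(proxy)


def scrape_all(urls, rotator, fetch):
    """Fetch each URL, moving on to another proxy whenever one is blocked.

    `fetch(url, proxy)` is hypothetical: it returns the page body or raises
    PermissionError when the site blocks that proxy.
    """
    results = {}
    for url in urls:
        while True:
            proxy = rotator.next_proxy()  # raises once the whole pool is blocked
            try:
                results[url] = fetch(url, proxy)
                break
            except PermissionError:
                rotator.mark_blocked(proxy)  # retire it and try the next one
    return results
```

Production tools often re-enable blocked proxies after a cool-down period instead of retiring them permanently. This sketch keeps things simple.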

Bypassing Geo-location Restrictions

Some websites restrict access based on users' locations, which they determine from the origin of the IP address. If you need to gather data from websites that restrict access by location or licensing, IP proxies can help by providing access from different geographic locations. By routing requests through proxies located in the same region as the target website, or in a region where the content is available, you can bypass these geo-location restrictions and access the data you need.
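Choosing a proxy by region can be as simple as keying the pool by country code. This is a minimal sketch assuming a hypothetical pool. The addresses are placeholders from documentation IP ranges, and the country labels would come from whoever supplies the proxies.

```python
import random

# Hypothetical pool: ISO 3166-1 alpha-2 country code -> proxy URLs exiting in that country
PROXY_POOL = {
    "US": ["http://203.0.113.10:8080", "http://203.0.113.11:8080"],
    "DE": ["http://198.51.100.20:8080"],
    "JP": ["http://192.0.2.30:8080"],
}


def proxy_for_country(pool: dict[str, list[str]], country: str) -> str:
    """Pick a proxy whose exit IP is labeled as being in `country`."""
    candidates = pool.get(country.upper(), [])
    if not candidates:
        raise LookupError(f"no proxy available in {country!r}")
    # Spread load across the proxies available in that country
    return random.choice(candidates)
```

The target site relies on its own IP geolocation data, which may not always agree with the provider's label. So it is worth spot-checking that a regional proxy actually unlocks the content you need.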

Maintaining Anonymity

Persistent scraping from a single IP address makes it easier for websites to identify and track the scraping activity, which can lead to legal or security issues. Proxies, by contrast, help maintain anonymity and reduce the risk of the scraping activity being traced back to its original source, because they mask your real IP address by making requests appear to come from the proxy's IP.

Managing Request Rate

Many websites now have mechanisms to detect and mitigate excessive request rates. Proxy pools solve this problem by letting you distribute requests evenly across multiple IP addresses, so that each address stays under the site's rate limit. This way, you can manage the request rate effectively and avoid rate limiting and blocking.
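Here is the arithmetic behind even distribution. With N proxies, each kept at or below r requests per minute, the pool as a whole can send up to N × r requests per minute while every individual IP stays within its limit. For example, 10 proxies at 10 requests per minute each give 100 requests per minute, so 10,000 pages take roughly 100 minutes. The sketch below builds such a schedule. The function name and the fixed per-IP rate are illustrative assumptions, not a specific tool's behavior.

```python
def plan_schedule(urls: list[str], proxies: list[str], per_ip_per_minute: int):
    """Assign URLs round-robin to proxies, spacing each proxy's requests evenly.

    Returns (send_at_seconds, proxy, url) tuples in send-time order.
    """
    if not proxies or per_ip_per_minute <= 0:
        raise ValueError("need at least one proxy and a positive per-IP rate")
    interval = 60.0 / per_ip_per_minute  # seconds between requests from the same IP
    schedule = []
    for i, url in enumerate(urls):
        proxy = proxies[i % len(proxies)]
        slot = i // len(proxies)  # how many earlier requests this proxy has been given
        schedule.append((slot * interval, proxy, url))
    return schedule


plan = plan_schedule(["u0", "u1", "u2", "u3"], ["p0", "p1"], per_ip_per_minute=30)
# Each proxy sends one request every 2 s, so the pool sends two at a time
print(plan)  # [(0.0, 'p0', 'u0'), (0.0, 'p1', 'u1'), (2.0, 'p0', 'u2'), (2.0, 'p1', 'u3')]
```

In practice, adding small random jitter to each send time also helps avoid the perfectly regular timing that is itself a bot-like signal.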

With these benefits, IP proxies can speed up data collection and help handle projects targeting 10,000+ pages more efficiently. Many web scraping service providers have recognized this need and built proxy features into their tools.

Octoparse – Web Scraping with Proxy Features

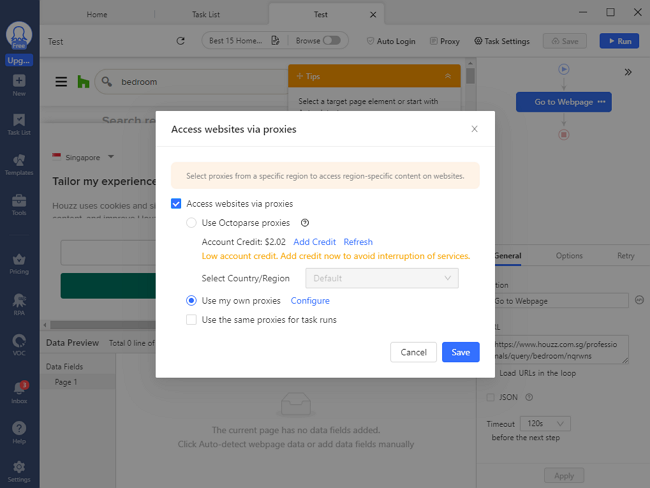

It's always recommended to use a web scraping tool that runs with IP proxies, especially when you need to scrape websites that use anti-scraping measures. Octoparse, a popular web scraping solution, offers IP proxy features.

Octoparse is a powerful free web scraping tool that can scrape most mainstream websites worldwide. Its cloud-based data extraction runs on a large pool of cloud IP addresses, which minimizes the chances of getting blocked and protects your local IP address. When you scrape data with Octoparse, you can set up its built-in proxies. These are residential IPs, which work better at avoiding blocks. You can even select IPs from a specific region or country for websites that are only accessible from particular locations. If you have your own IP proxies, you can use them in Octoparse as well.

Tips:

To learn more about how to set up IP proxies in Octoparse, please check here.

You can also find more in our blog post Best Proxies for Web Scraping (Free vs Premium Options).

Wrap Up

What we have covered: proxy servers solve the main problems that derail web scraping projects.

- They prevent IP blocking by rotating requests across multiple addresses.

- They bypass location restrictions by providing IPs from different countries.

- They maintain anonymity by hiding your real IP address from target websites.

- Most importantly, they let you control request rates to avoid detection while collecting large amounts of data.

Now you can easily set up IP proxies in Octoparse and reach your data collection goals more efficiently. Try Octoparse now and greatly reduce your chances of getting blocked!