Before getting started, here is a quick definition of web scraping: it means extracting information from the HTML of a web page. Web scraping with PHP is no different from doing it in any other programming language or with a web scraping tool such as Octoparse.

This article shows how beginners can build a simple web crawler in PHP. If you plan to learn PHP and use it for web scraping, follow the steps below.

Web Crawler in PHP

Step 1

Add an input box and a submit button to the web page. The input box is where we enter the address of the page to crawl.
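As a rough illustration of Step 1, the form could be produced by a small helper like the one below. The function name `renderCrawlerForm` and the button label are assumptions made for this sketch; the field name `URL` is chosen only because the extraction code in Step 6 reads `$_POST['URL']`.

```php
<?php
// Hypothetical helper (not part of the original article's code):
// returns the HTML for a form with one text box and one submit button.
// With no "action" attribute, the browser posts back to the same page.
function renderCrawlerForm(): string
{
    return '<form method="post">'
        . '<input type="text" name="URL" size="80">'   // read later as $_POST['URL']
        . '<input type="submit" value="Crawl">'
        . '</form>';
}

echo renderCrawlerForm();
```

Placing the form and the extraction code in the same PHP file keeps the example to a single page, which is probably the simplest setup for a first crawler.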

Step 2

Regular expressions are needed when extracting data. The function below returns the text found between a start pattern and an end pattern.

function preg_substr($start, $end, $str) // Regular expression

{

$temp = preg_split($start, $str);

$content = preg_split($end, $temp[1]);

return $content[0];

}
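To make the behaviour concrete, here is a minimal sketch of the same split-twice technique applied to a made-up HTML fragment. The name `preg_substr_safe`, the sample markup, and the guards that return an empty string when a pattern is not found are all additions for illustration, not part of the original function.

```php
<?php
// Variant of the regex extractor with guards (an assumption of this sketch):
// returns '' instead of raising a warning when the start pattern is missing.
function preg_substr_safe(string $start, string $end, string $str): string
{
    // Split once on the start pattern; index 1 holds everything after it.
    $temp = preg_split($start, $str, 2);
    if ($temp === false || count($temp) < 2) {
        return '';
    }
    // Split the remainder once on the end pattern; index 0 is the wanted text.
    $content = preg_split($end, $temp[1], 2);
    return $content === false ? '' : $content[0];
}

// Made-up sample markup for the demonstration.
$html = '<span id="title" class="big">PHP Basics</span><p>Other text</p>';

echo preg_substr_safe('/<span id="title"[^>]*>/', '/<\/span>/', $html), PHP_EOL;
// prints "PHP Basics"
```

Limiting each split to two pieces means only the first occurrence of each pattern matters, which matches how the function is used later on.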

Step 3

Plain string splitting is also needed when extracting data, for cases where the delimiters are fixed strings rather than patterns.

function str_substr($start, $end, $str) // string split

{

$temp = explode($start, $str, 2);

$content = explode($end, $temp[1], 2);

return $content[0];

}
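The sketch below applies the same explode-twice idea to a made-up line of inline JavaScript, similar in shape to the `var imageSrc = "` delimiter used in Step 6. The name `str_substr_safe`, the sample input, and the empty-string fallback are assumptions added for illustration.

```php
<?php
// Variant of the string-split extractor with a guard (an assumption of this
// sketch): returns '' when the start delimiter does not occur in the input.
function str_substr_safe(string $start, string $end, string $str): string
{
    // Everything after the first occurrence of $start lands in index 1.
    $temp = explode($start, $str, 2);
    if (count($temp) < 2) {
        return '';
    }
    // Everything before the first occurrence of $end lands in index 0.
    $content = explode($end, $temp[1], 2);
    return $content[0];
}

// Made-up sample input resembling inline JavaScript on a product page.
$page = 'var imageSrc = "https://example.com/img/cover.jpg"; var other = "x";';

echo str_substr_safe('var imageSrc = "', '"', $page), PHP_EOL;
// prints "https://example.com/img/cover.jpg"
```

When the delimiters never vary, this approach avoids having to escape regex metacharacters.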

Step 4

Add a function to save the extracted content:

function writelog($str)

{

@unlink("log.txt"); // remove the previous log, if any

$open = fopen("log.txt", "a");

fwrite($open,$str);

fclose($open);

}

When the extracted content differs from what the browser displays, the regular expressions written against the browser view will not match. In that case, open the saved log.txt file to find the exact string to match.

Step 5

If you also need to download images, add a function for that:

function getImage($url, $filename, $dirName, $fileType, $type = 0)

{

if ($url == '') { return false; }

//get the default file name

$defaultFileName = basename($url);

//file type

$suffix = substr(strrchr($url, '.'), 1);

if(!in_array($suffix, $fileType)){

return false;

}

//set the file name

$filename = $filename == '' ? time() . rand(0, 9) . '.' . $suffix : $defaultFileName;

//get remote file resource

if($type){

$ch = curl_init();

$timeout = 5;

curl_setopt($ch, CURLOPT_URL, $url);

curl_setopt($ch, CURLOPT_RETURNTRANSFER, 1);

curl_setopt($ch, CURLOPT_CONNECTTIMEOUT, $timeout);

$file = curl_exec($ch);

curl_close($ch);

}else{

ob_start();

readfile($url);

$file = ob_get_contents();

ob_end_clean();

}

//set file path

$dirName = $dirName . '/' . date('Y/m/d') . '/';

if(!file_exists($dirName)){

mkdir($dirName, 0777, true);

}

//save file

$res = fopen($dirName . $filename, 'a');

fwrite($res,$file);

fclose($res);

return $dirName.$filename;

}

Step 6

Now write the extraction code. Let's take a web page from Amazon as an example: enter a product link in the input box and submit it.

if (!empty($_POST['URL'])) {

//---------------------example---------------------

$str = file_get_contents($_POST['URL']);

$str = mb_convert_encoding($str, 'utf-8', 'iso-8859-1');

writelog($str);

//echo $str;

echo('Title:' . preg_substr('/<span id="btAsinTitle"[^>]*>/', '/<\/span>/', $str));

echo('<br/>');

$imgurl = str_substr('var imageSrc = "', '"', $str);

echo '<img src="' . getImage($imgurl, '', 'img', array('jpg')) . '"/>';

}

After submitting the form, we can see what was extracted. Below is a screenshot of the result.

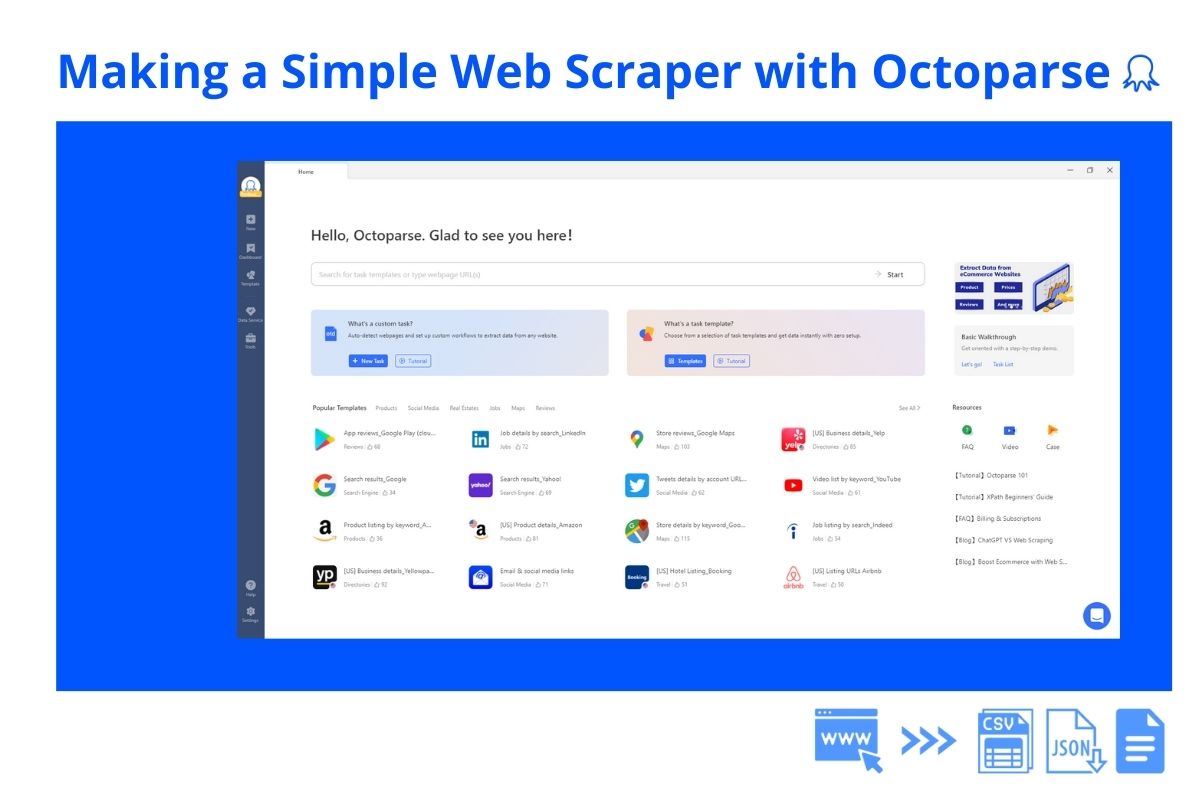
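Because the extraction code passes the submitted address straight to file_get_contents, it may be worth checking the address first. The sketch below is one possible check, not part of the original crawler: the helper name `isFetchableUrl` is hypothetical, and restricting input to http and https is an assumption about what the crawler should accept.

```php
<?php
// Hypothetical helper: accept only absolute http(s) URLs so that
// other schemes such as file:// are rejected before fetching.
function isFetchableUrl(string $url): bool
{
    // Reject strings that are not syntactically valid URLs.
    if (filter_var($url, FILTER_VALIDATE_URL) === false) {
        return false;
    }
    // Allow only the two web schemes (an assumption of this sketch).
    $scheme = strtolower((string) parse_url($url, PHP_URL_SCHEME));
    return in_array($scheme, ['http', 'https'], true);
}

var_dump(isFetchableUrl('https://example.com/item?id=1')); // bool(true)
var_dump(isFetchableUrl('file:///etc/passwd'));            // bool(false)
var_dump(isFetchableUrl('not a url'));                     // bool(false)
```

A check like this could wrap the call in Step 6, so that the page is fetched only when the helper returns true.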

Web Crawling for Non-coders

You no longer need to code a web crawler yourself if you use an automated web crawling tool.

As mentioned previously, PHP is only one tool for creating a web crawler. Other programming languages, such as Python and JavaScript, are also good choices for those who are familiar with them. As web scraping technology develops, more and more web scraping tools, such as Octoparse, Beautiful Soup, Import.io, and Parsehub, are emerging. They simplify the process of creating a web crawler.

Take Octoparse Web Scraping Templates as an example: they let anyone scrape data using pre-built templates. No crawler setup is needed; simply enter the keywords to search with and get the data.