What is a web scraping tool?

A web scraper is, simply put, a tool that helps you quickly collect unstructured data you see on the web and turn it into structured formats such as Excel, plain text or CSV. One of the most widely recognized benefits of a web scraping tool is that it frees you from tedious copy-and-paste work that could otherwise take forever to finish. The process can be automated to the point where the data you need is delivered to you on schedule, in the format you require.
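
To make the idea concrete, here is a minimal Python sketch of the core step every scraper performs: reading the rows of an HTML table and writing them out as CSV. It uses only the standard library; the sample markup and the `html_table_to_csv` helper are hypothetical illustrations, not code from any of the tools below, which automate this step (and a lot more) for you.

```python
import csv
import io
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows, each a list of cell strings
        self._row = None    # row currently being built
        self._cell = None   # text chunks of the cell currently being built

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None

    def handle_data(self, data):
        # Only keep text that sits inside a table cell.
        if self._cell is not None:
            self._cell.append(data)


def html_table_to_csv(html: str) -> str:
    """Turn the rows of an HTML table into CSV text."""
    parser = TableRowParser()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    csv.writer(out).writerows(parser.rows)
    return out.getvalue()


SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>$9.99</td></tr>
  <tr><td>Gadget</td><td>$24.50</td></tr>
</table>
"""

print(html_table_to_csv(SAMPLE_HTML))
```

The CSV text can be saved straight to a `.csv` file and opened in Excel, which is exactly the "unstructured to structured" conversion described above.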

Many different web scraping tools are available: some require a technical background, while others are designed for non-coders. I will compare in depth five popular web scraping tools I’ve used, including how each of them is priced and what’s included in the various packages.

So what are some ways that data can be used to create value?

- I’m a student and I need data to support my research/thesis writing

- I’m a marketing analyst and I need to collect data to support my marketing strategy

- I’m a product guru and I need data for competitive analysis of different products

- I’m a CEO and I need data on all business sectors to help me with my strategic decision-making process

- I’m a data analyst and there’s no way I can do my job without data

- I’m an eCommerce guy and I need to know how prices fluctuate for the products I’m selling

- I’m a trader and I need UNLIMITED financial data to guide my next move in the market

- I’m in the machine learning/deep learning field and I need an abundance of raw data to train my models

And there are countless more reasons people may need data!

What are some of the most popular web scraping tools?

1. Octoparse

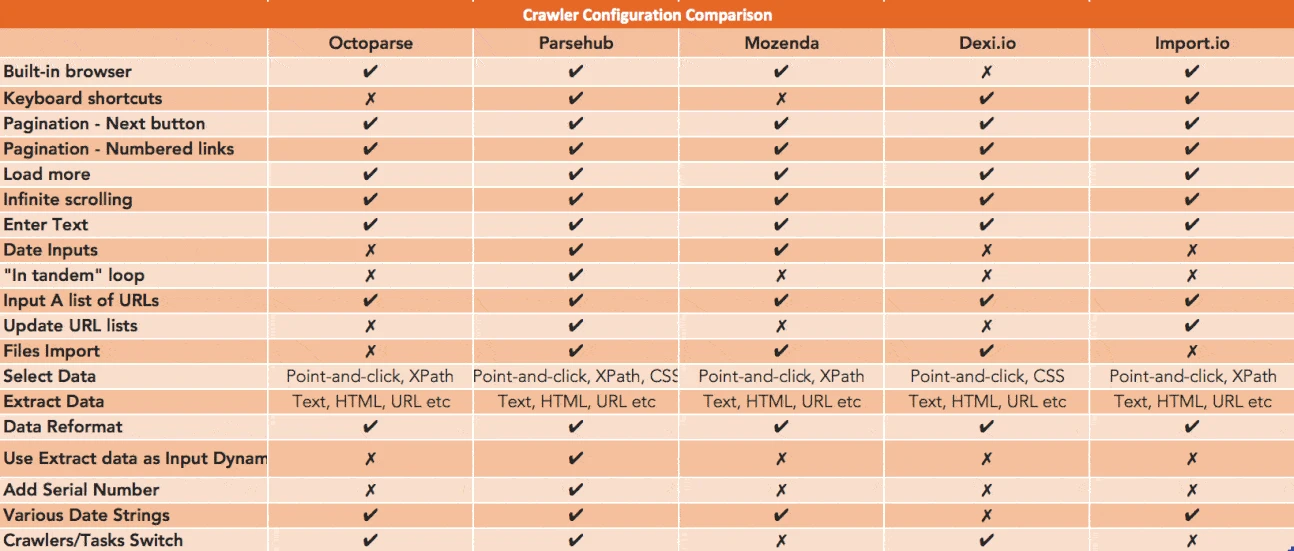

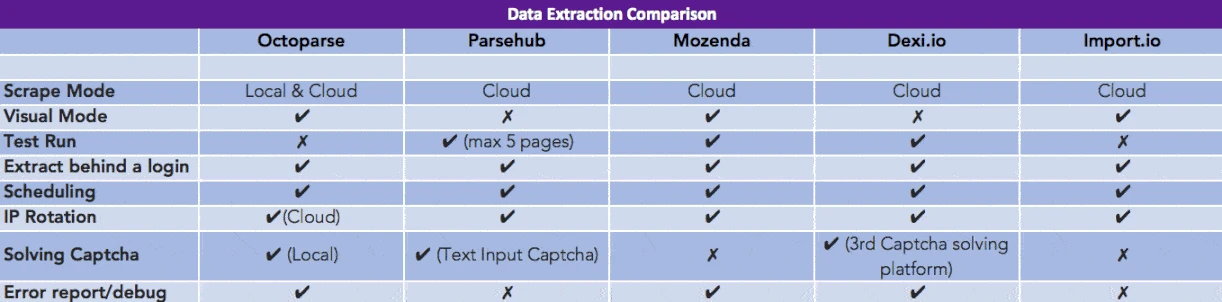

Octoparse is an easy-to-use web scraping tool designed to handle complex web scraping for non-coders. As an intelligent web scraper for both Windows and Mac OS, it automatically “guesses” the desired data fields for users, which saves a great deal of time and effort because you don’t need to select the data manually. It is powerful enough to handle dynamic websites and to interact with sites in various ways, such as authentication, text input, selecting from drop-down menus, hovering over dynamic menus, infinite scrolling and more. Octoparse offers cloud-based extraction (a paid feature) as well as local extraction (free). For precise scraping, Octoparse also has built-in XPath and regular expression tools to help users scrape data with high accuracy.
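
Octoparse’s XPath and regex tools are point-and-click features, so the snippet below is not Octoparse code. It is a minimal standard-library Python sketch of what those two techniques do: an XPath-style path selects the right elements, and a regular expression pulls the exact value out of messy text. The page markup, class names and `extract_products` function are hypothetical. Note that `xml.etree.ElementTree` supports only a subset of XPath and requires well-formed markup; real pages usually call for a more forgiving HTML parser.

```python
import re
import xml.etree.ElementTree as ET

# Hypothetical, well-formed (XHTML-style) page fragment.
PAGE = """
<html><body>
  <div class="product"><span class="name">Widget</span><span class="price">Price: $9.99</span></div>
  <div class="product"><span class="name">Gadget</span><span class="price">Now only $24.50!</span></div>
  <div class="ad"><span class="price">$0.00</span></div>
</body></html>
"""

# First dollar amount in free-form text, e.g. "Now only $24.50!" -> "24.50"
PRICE_RE = re.compile(r"\$(\d+(?:\.\d{1,2})?)")


def extract_products(page: str) -> list[tuple[str, float]]:
    root = ET.fromstring(page.strip())
    results = []
    # XPath-style selection: every <div class="product"> anywhere in the page,
    # which skips the <div class="ad"> block.
    for product in root.findall(".//div[@class='product']"):
        name = product.findtext("span[@class='name']", default="").strip()
        price_text = product.findtext("span[@class='price']", default="")
        match = PRICE_RE.search(price_text)
        if name and match:
            results.append((name, float(match.group(1))))
    return results


print(extract_products(PAGE))
```

The path narrows the search to the product blocks, and the regex then ignores surrounding words like “Now only”, which is the kind of precision the built-in tools aim for.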

2. Parsehub

Parsehub is another non-programmer-friendly tool. As a desktop application, Parsehub runs on several systems, including Windows, Mac OS X and Linux. Like Octoparse, Parsehub can handle the complex web scraping scenarios mentioned earlier. Although Parsehub aims to offer an easy web scraping experience, a typical user will still need to be a bit technical to fully grasp many of its advanced functions.

3. Dexi.io

Dexi.io is a cloud-based web scraper providing development, hosting and scheduling services. Dexi.io can be very powerful but requires more advanced programming skills than Octoparse and Parsehub. Dexi offers three kinds of robots: extractors, crawlers and pipes. It also supports integration with many third-party services, such as CAPTCHA solvers and cloud storage.

4. Mozenda

Mozenda offers a cloud-based web scraping service, similar to Octoparse’s cloud extraction. As one of the “oldest” web scraping tools on the market, Mozenda performs with a high level of consistency, has a nice-looking UI and offers everything else you may need to start a web scraping project. Mozenda has two parts: the Mozenda Web Console and the Agent Builder. The Agent Builder is a Windows application used for building scraping projects, while the Web Console is a web application that lets users schedule project runs and access the extracted data. Because the Agent Builder relies on Windows, Mozenda can be a bit tricky for Mac users.

5. Import.io

Famous for its “Magic” feature, which automatically turns any website into structured data, Import.io has gained popularity. However, many users have found that it is not “magical” enough to handle many kinds of websites. That said, Import.io has a nice, well-guided interface and supports real-time data retrieval through JSON REST-based and streaming APIs. As a web application, it can be used on various operating systems.
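
Import.io’s actual endpoints and response schema aren’t described here, so the following is only a generic sketch of the pattern a JSON REST API enables: fetch a JSON response and flatten its records into CSV rows. `API_URL`, the `results` key and both helper functions are hypothetical placeholders; the usage at the bottom runs offline on a canned response instead of calling the network.

```python
import csv
import io
import json
import urllib.request

# Hypothetical endpoint: a placeholder, not a real Import.io URL.
API_URL = "https://api.example.com/extractor/123/data?format=json"


def fetch_json(url: str) -> dict:
    """GET a URL and decode its JSON body (requires network access)."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


def json_records_to_csv(payload: dict, fields: list[str]) -> str:
    """Write the objects under payload["results"] as CSV rows.

    The "results" key is an assumed response shape, not a documented schema.
    Keys not listed in `fields` are dropped; missing keys become empty cells.
    """
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    for record in payload.get("results", []):
        writer.writerow(record)
    return out.getvalue()


# Offline usage with a canned response instead of fetch_json(API_URL):
sample = json.loads(
    '{"results": [{"name": "Widget", "price": 9.99, "url": "/w"},'
    ' {"name": "Gadget", "price": 24.5}]}'
)
print(json_records_to_csv(sample, ["name", "price"]))
```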

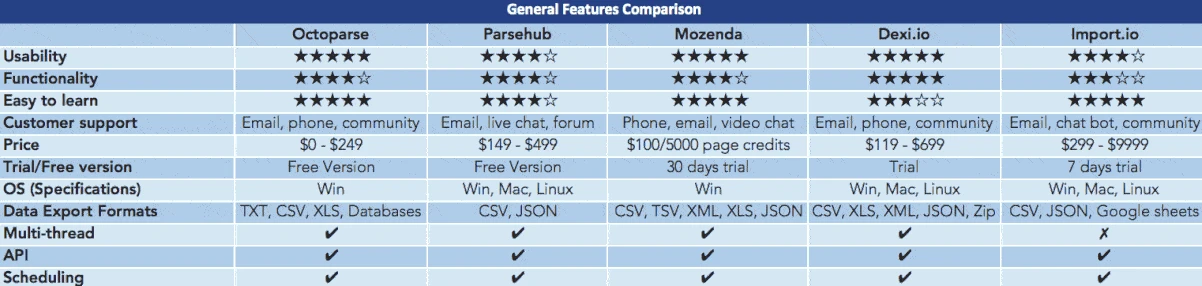

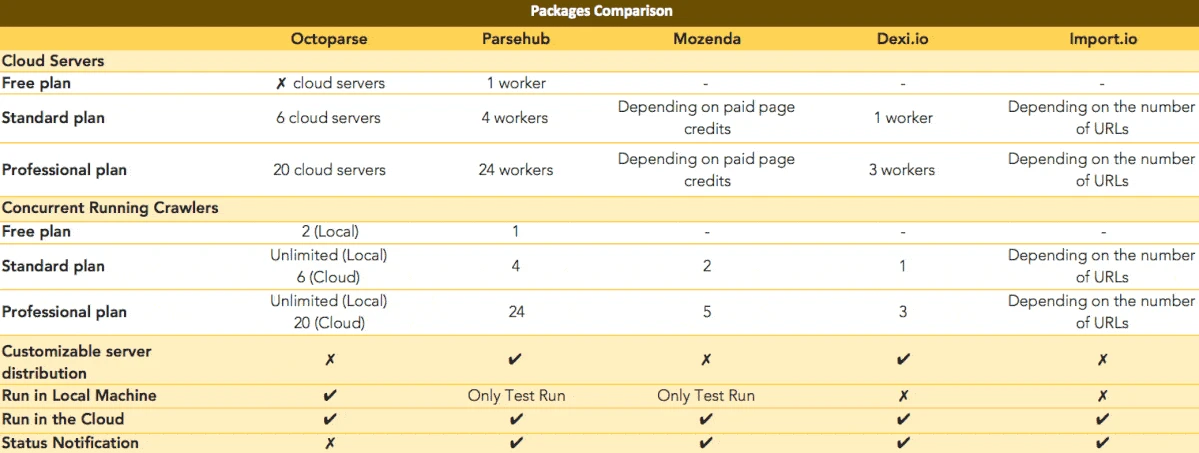

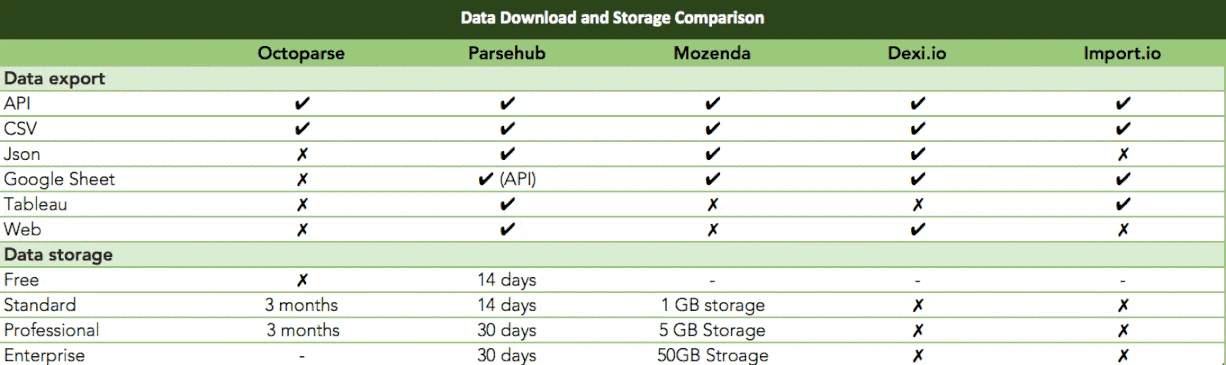

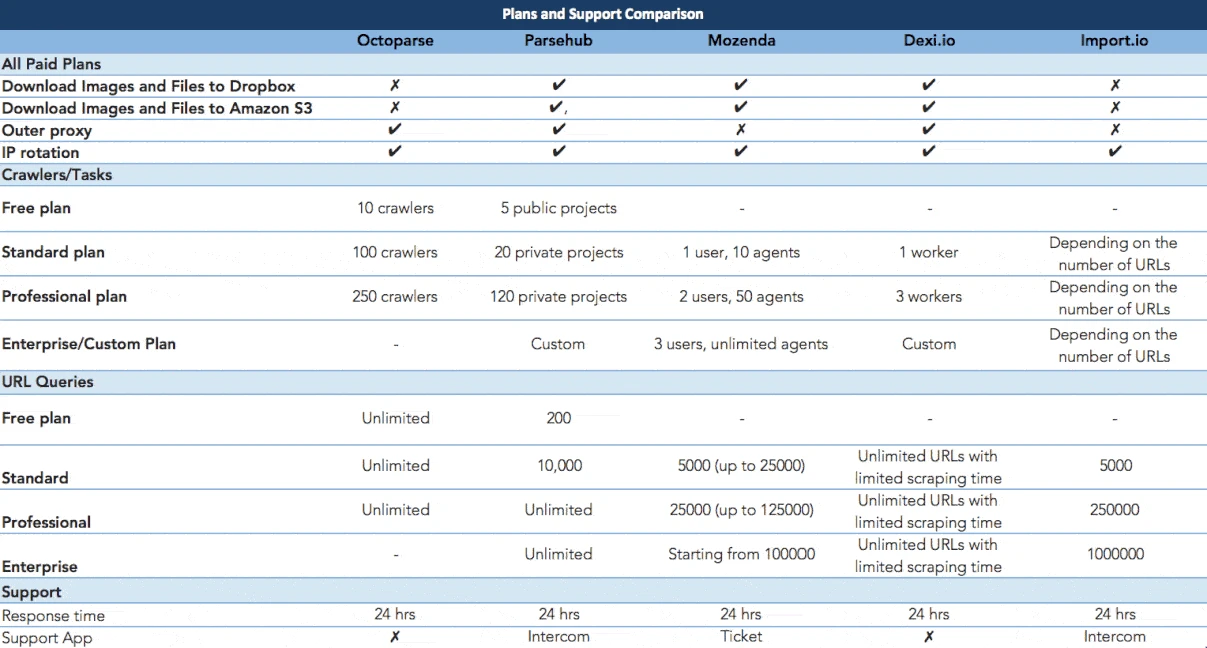

Detailed Feature-by-Feature Comparisons

Conclusion

There isn’t one tool that’s perfect. Every tool has its pros and cons, and each suits some users better than others. Of the five, Octoparse and Mozenda are by far the easiest to use. They are built to make web scraping possible for non-programmers, so you can expect to get the hang of them quickly by watching a few video tutorials. Import.io is also easy to get started with but works best only on websites with a simple structure. Dexi.io and Parsehub are both powerful scrapers with robust functionality. They do, however, require some programming skills to master.

I hope this article gives you a good start on your web scraping project. Drop me a note if you have any questions. Happy data hunting!