Twitter, now known as X, is one of the most popular social platforms. You may well be interested in what famous people say on Twitter, and it is also an important source of business leads and market trends. So, how can you extract that much data into formats like Excel, CSV, Google Sheets, or even a database?

In this article, you will learn how to scrape Twitter data, including tweets, comments, hashtags, and images, with the best Twitter scraper. You can finish scraping within 5 minutes without using the API, Tweepy, or Python, and without writing a single line of code.

Is It Legal to Scrape Twitter?

Generally speaking, scraping public data is legal. However, you should always respect copyright and personal data regulations. How you use the scraped data is your responsibility, so pay attention to your local laws. Read about web scraping legality to learn more about this question.

If you are still concerned about legality or compliance, you can try the Twitter API. It offers access to Twitter for advanced users with programming knowledge. You can get information such as Tweets, Direct Messages, Spaces, Lists, users, and more.

Twitter Changes to X: What People Say

Twitter changed its logo from the iconic blue bird to the X on July 24, 2023. Now you can see the brand new X logo when visiting Twitter.com, and the new domain x.com now redirects to twitter.com. There are many trending topics like #Xeet and #Twitter”X” discussed on Twitter.

So, what do you think about Twitter rebranding to X, and what do other people say about it? Here are 3 tips we recommend for scraping the news with Octoparse, the best web scraping tool.

Tip 1: Scrape Comments from Elon Musk’s Tweet

Elon Musk’s latest tweet says “Our headquarters tonight” and has almost 40k comments now. The video about the new logo that he tweeted earlier already has 47.5k comments. These comments are a good place to see what people say about the changes.

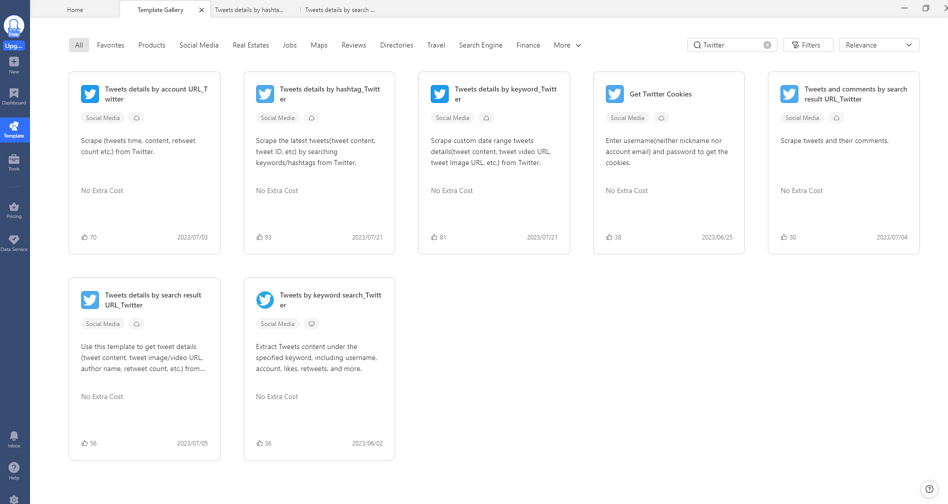

Octoparse provides two ways to scrape comments from Twitter. One is to scrape all comments and replies manually from the tweet URL, and the other is to use a preset scraping template.

https://www.octoparse.com/template/tweets-comments-scraper-by-search-result-url

Tip 2: Get Tweets by Hashtag

You can scrape all the tweets under a specific hashtag, like #Xeet. You can find the scraping template on Octoparse, which is named Tweets details by hashtag_Twitter. With it, you can get the data, including the tweet URL, author name and account, post time, image or video content, likes, etc. Or you can scrape the tweets manually by setting up a workflow.

https://www.octoparse.com/template/twitter-scraper-by-hashtag

Tip 3: Get Twitter Search Results with a Keyword

If the above tips can’t meet your needs, you can search for a keyword yourself and download the search results. Similarly, you can use a preset template provided by Octoparse, named Tweets details by search result URL_Twitter. Or you can follow the steps below to scrape tweets yourself.

https://www.octoparse.com/template/twitter-scraper-by-keywords

Twitter Scraper: Extract Data from X Without Coding

Beyond the specific templates for Twitter data scraping, you can also try the Octoparse software for free. Its AI-based auto-detecting function makes it easy to use, which means you can extract data from Twitter without coding skills. Advanced functions like cloud scraping, IP rotation, and CAPTCHA solving can also be used in Octoparse to customize your Twitter scraping.

Public data such as account profiles, tweets, hashtags, comments, and likes can be exported to Excel, CSV, Google Sheets, or SQL, or streamed into a database in real time via Octoparse. Download Octoparse and follow the easy steps below to build a free web crawler for Twitter.

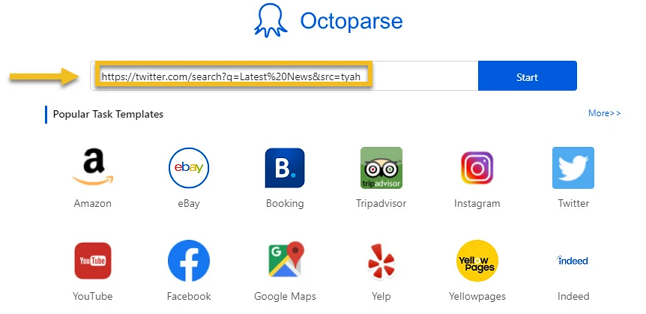

Step 1: Input Twitter URL and set up pagination

In this case, we are scraping the official Twitter account of Octoparse. As you can see, the website is loaded in the built-in browser. Many websites have a “next page” button that Octoparse can click to move from page to page and grab more information. Twitter, however, uses “infinite scrolling”, which means that you need to scroll down the page to let Twitter load a few more tweets, and then extract the data shown on the screen. So the final extraction process will work like this: Octoparse scrolls down the page a little, extracts the tweets, scrolls down a bit more, extracts again, and so on.

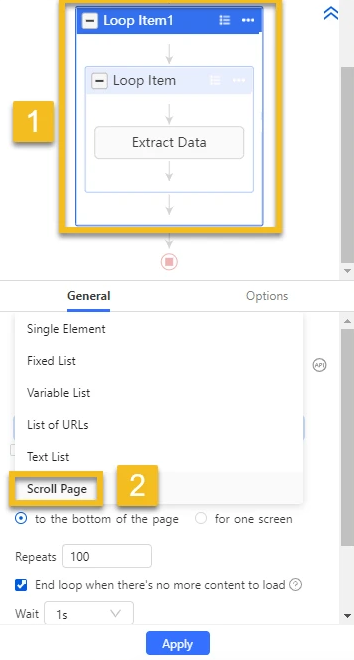
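The scroll-then-extract cycle described in this step can be sketched in a few lines of Python. This is only an illustration of the idea, not how Octoparse is implemented: `scrape_with_scrolling`, `fake_page`, and `FAKE_TIMELINE` are hypothetical names, and the fake page stands in for a real browser. Because consecutive screens overlap, the sketch keeps track of what it has already seen so each tweet is collected once.

```python
from typing import Callable, List


def scrape_with_scrolling(load_visible: Callable[[int], List[str]],
                          max_scrolls: int) -> List[str]:
    """Alternate between scrolling and extracting, keeping each item once."""
    seen = set()
    collected: List[str] = []
    for scroll in range(max_scrolls):
        new_items = 0
        # "Extract the data shown on the screen" after this scroll.
        for item in load_visible(scroll):
            if item not in seen:
                seen.add(item)
                collected.append(item)
                new_items += 1
        # If a scroll reveals nothing new, the feed is exhausted: stop early.
        if new_items == 0:
            break
    return collected


# Hypothetical stand-in for a timeline with 7 tweets in total.
FAKE_TIMELINE = [f"tweet-{i}" for i in range(7)]


def fake_page(scroll: int) -> List[str]:
    """Each scroll shows 3 tweets and overlaps the previous screen by one."""
    start = scroll * 2
    return FAKE_TIMELINE[start:start + 3]


tweets = scrape_with_scrolling(fake_page, max_scrolls=20)
print(len(tweets), "unique tweets collected")
```

The deduplication step matters with infinite scrolling, because the same tweet is often still visible after the page moves down.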

Step 2: Build a loop item

To tell the crawler to scroll down the page repeatedly, we can build a pagination loop by clicking on the blank area and clicking “loop click single element” on the Tips panel. As you can see here, a pagination loop is shown in the workflow area, which means that we’ve set up pagination successfully.

Now, let’s extract the tweets. Let’s say we want to get the handle, publish time, text content, and the number of comments, retweets, and likes. First, let’s build an extraction loop to get the tweets one by one. Hover the cursor over the corner of the first tweet and click on it. When the whole tweet is highlighted in green, it is selected. Repeat this action on the second tweet. As you can see, Octoparse is an intelligent bot and has automatically selected all the following tweets for you. Click on “extract text of the selected elements” and you will find that an extraction loop has been built into the workflow.

But we want to extract different data fields into separate columns instead of just one, so we need to modify the extraction settings to select our target data manually. This is very easy to do. Make sure you go into the “action setting” of the “extract data” step. Click on the handle, and click “extract the text of the selected element”. Repeat this action for every data field you want. Once you are finished, delete the first giant column, which we don’t need, and save the crawler. Now, our final step awaits.
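To picture what “separate columns” means for the fields chosen in this step, here is a minimal Python sketch that models one tweet as a record and writes it out as CSV. The `TweetRow` class, the `rows_to_csv` helper, and the sample values are hypothetical and only illustrate the shape of the output; Octoparse produces its export through its own interface.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class TweetRow:
    """One row per tweet, one attribute per column chosen in this step."""
    handle: str
    publish_time: str
    text: str
    comments: int
    retweets: int
    likes: int


def rows_to_csv(rows):
    """Serialize tweet rows to CSV text, with a header built from the field names."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(TweetRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))
    return buffer.getvalue()


# Made-up sample data, not a real tweet.
sample = [TweetRow("@example_user", "2023-07-24T10:00:00Z", "Hello, X!", 3, 1, 12)]
csv_text = rows_to_csv(sample)
print(csv_text)
```

Keeping each field in its own column is what makes the data easy to sort and filter later, for example by likes or by publish time.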

Step 3: Modify the pagination settings and run the Twitter crawler

We built a pagination loop earlier, but the workflow settings still need a small modification. As we want Twitter to load the content fully before the bot extracts it, let’s set the AJAX timeout to 5 seconds, which gives Twitter 5 seconds to load after each scroll. Then, let’s set both the scroll repeats and the wait time to 2 to make sure that Twitter loads the content successfully. Now, for each scroll, Octoparse will scroll down 2 screens and wait 2 seconds on each screen.

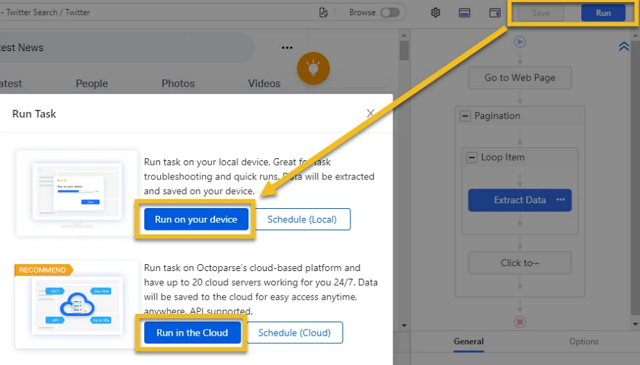

Head back to the loop item settings and set the loop count to 20. This means that the bot will repeat the scrolling 20 times. You can now run the crawler on your local device to get the data, or run it on Octoparse Cloud servers to schedule your runs and save local resources. Note that blank cells in the columns mean that there is no original data on the page, so nothing is extracted.
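As a rough sanity check on these settings, the short Python sketch below works out how many screens the crawler scrolls and roughly how long a run takes. The `ScrollSettings` class is hypothetical, and the worst-case figure assumes that the full AJAX timeout is spent once per loop, which is an assumption made for this estimate rather than documented Octoparse behavior.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScrollSettings:
    """The values chosen in Step 3."""
    loop_count: int = 20              # how many times the scrolling is repeated
    scroll_repeats: int = 2           # screens scrolled per loop
    wait_seconds: float = 2.0         # wait time per screen
    ajax_timeout_seconds: float = 5.0

    def total_screens(self) -> int:
        return self.loop_count * self.scroll_repeats

    def scrolling_seconds(self) -> float:
        # Time spent only on scrolling and waiting between screens.
        return self.loop_count * self.scroll_repeats * self.wait_seconds

    def worst_case_seconds(self) -> float:
        # Assumption: the full AJAX timeout is used once in every loop.
        per_loop = self.scroll_repeats * self.wait_seconds + self.ajax_timeout_seconds
        return self.loop_count * per_loop


settings = ScrollSettings()
print(settings.total_screens())       # 40
print(settings.scrolling_seconds())   # 80.0
print(settings.worst_case_seconds())  # 180.0
```

Under these assumptions, a run covers 40 screens and takes between roughly 80 seconds and 3 minutes. Raising the loop count scales both numbers linearly.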

Video tutorial: Scrape Twitter data for sentiment analysis

Twitter Data Scraping with Python

You can also scrape Twitter with Python if you are comfortable with coding. Libraries such as Tweepy or Twint are commonly used for this. Tweepy works through the official API, so you need to create a Twitter Developer Account and apply for API access, and the API limits how many tweets you can retrieve. Twint scrapes tweets without that limit; you can learn more from this article on how to use Twint Python to scrape tweets.
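For readers who want to see what the Tweepy route looks like, here is a minimal sketch. It assumes Tweepy v4 and its `Client.search_recent_tweets` method, and it requires a bearer token from your own developer account. The helper names `make_client` and `fetch_recent_tweets` are hypothetical, and whether the call succeeds depends on the limits of your API access level.

```python
def make_client(bearer_token: str):
    """Create a Tweepy client; the token comes from your developer account."""
    import tweepy  # third-party package: pip install tweepy
    return tweepy.Client(bearer_token=bearer_token)


def fetch_recent_tweets(client, hashtag: str, limit: int = 10):
    """Return recent tweets for a hashtag as a list of plain dictionaries."""
    # The recent-search endpoint accepts between 10 and 100 results per request.
    limit = max(10, min(limit, 100))
    response = client.search_recent_tweets(
        query=f"#{hashtag} -is:retweet",   # skip retweets to reduce duplicates
        max_results=limit,
        tweet_fields=["created_at", "public_metrics"],
    )
    rows = []
    # response.data is None when the search returns nothing.
    for tweet in response.data or []:
        rows.append({"id": tweet.id, "created_at": tweet.created_at, "text": tweet.text})
    return rows


# Example usage (needs a real token, so it is left commented out):
# client = make_client("YOUR_BEARER_TOKEN")
# for row in fetch_recent_tweets(client, "Xeet", limit=20):
#     print(row["created_at"], row["text"])
```

The function takes the client as a parameter so that the search logic can be tried out with a stand-in object before any credentials are involved.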

Final Thoughts

Now, you have a general idea of Twitter web scraping with both coding and no-coding methods. Choose the one that is most suitable for your needs. However, Octoparse is always the best choice if you want to save time or energy.